What is TinyML? The Complete Guide to Embedded Machine Learning

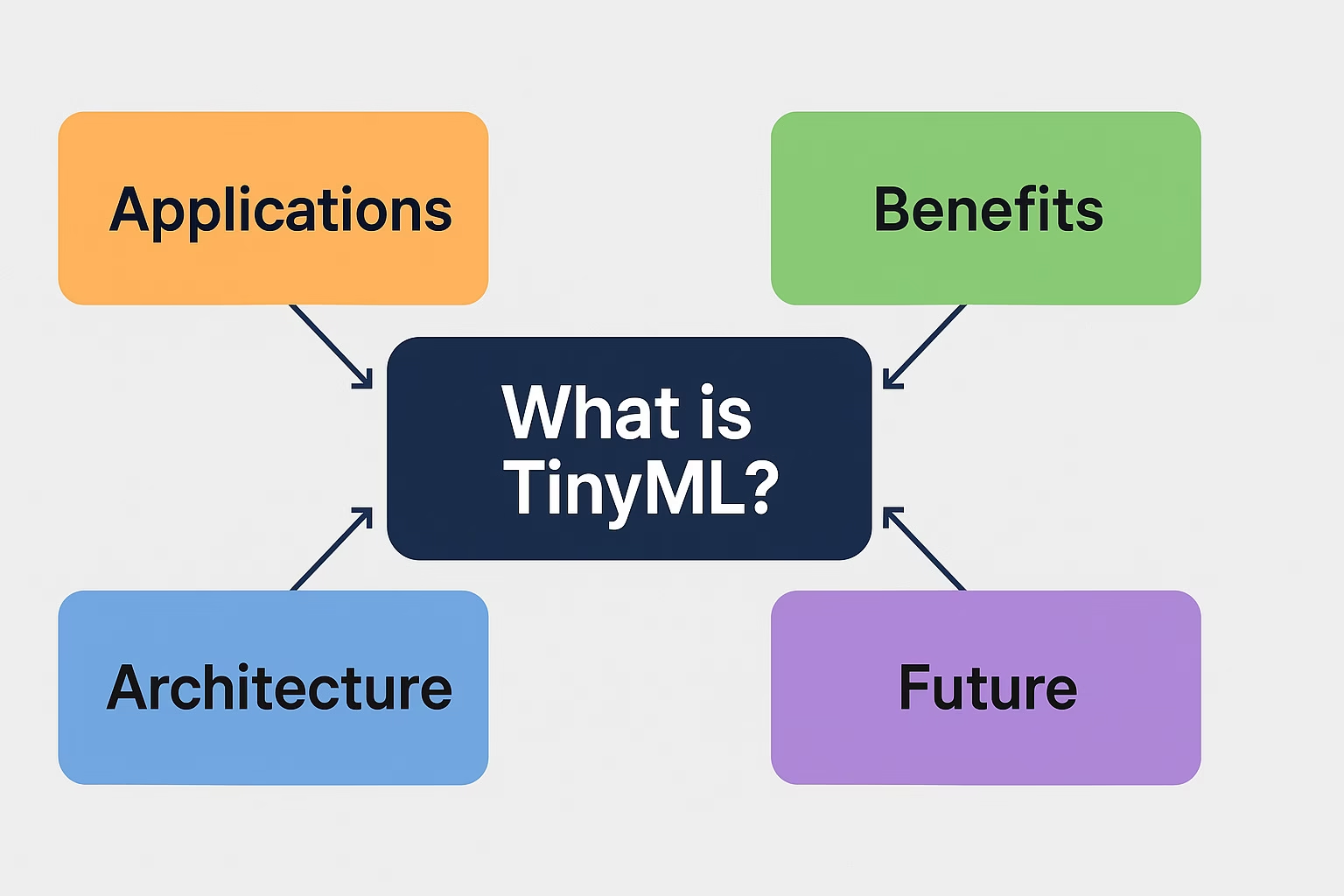

TinyML brings machine learning capabilities to tiny, low-power devices. By embedding intelligence directly into hardware like sensors and microcontrollers, TinyML allows real-time decision-making at the edge. In this guide, we’ll explore what TinyML is, how it works, its applications, architecture, and the future it’s shaping.

Machine learning traditionally requires powerful cloud servers to process data. However, with the rise of the Internet of Things (IoT), it became essential to process data locally due to latency, privacy, and energy constraints. TinyML solves this challenge by running ML models on microcontrollers with minimal power and memory.

This paradigm shift enables devices to perform intelligent tasks without constantly connecting to the internet. From smart home devices to wearable health monitors, TinyML is unlocking a new era of responsive and autonomous edge computing.

Understanding TinyML Technology: What Makes It Unique?

Definition and Core Concepts of TinyML

TinyML refers to the deployment of machine learning algorithms on ultra-low-power hardware like microcontrollers, typically drawing power in the milliwatt range or below. These devices are capable of sensing, analyzing, and acting on data without relying on cloud infrastructure. This opens the door for innovation in fields where connectivity is limited or latency is critical.

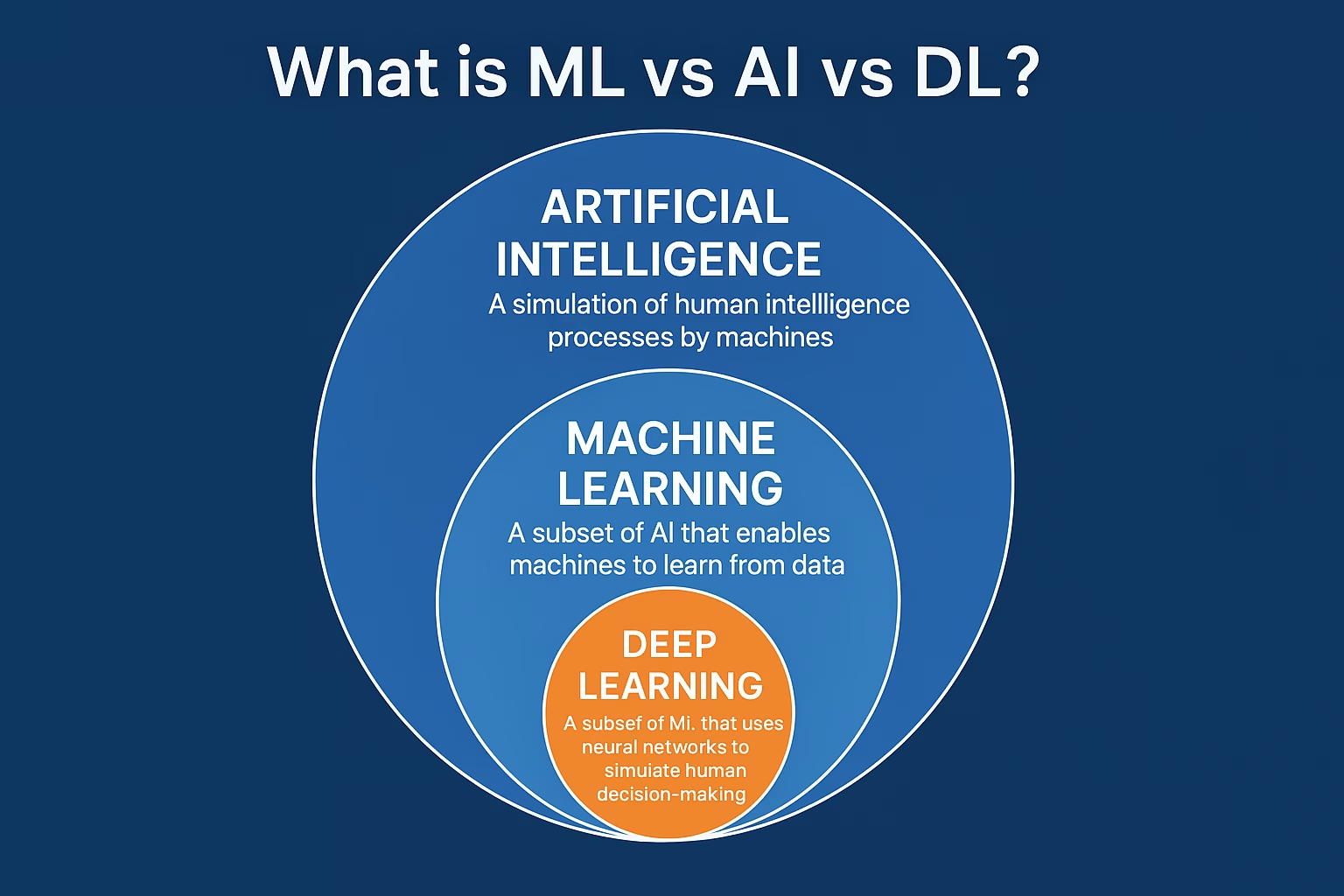

Comparison with Traditional Machine Learning

Traditional machine learning pipelines collect data at the device, send it to the cloud for processing, and return a response only after a network round trip. TinyML breaks this dependency by enabling local data processing. This approach not only speeds up response times but also protects sensitive user data by keeping it on-device. Unlike high-resource ML systems, TinyML models are compact and optimized for limited memory and processing capabilities.

Why TinyML is Growing in Importance

The push for decentralization, privacy, and energy-efficient computing is driving the adoption of TinyML. As edge devices proliferate across industries, the demand for real-time AI at the source of data continues to grow. TinyML meets this demand by combining intelligence, efficiency, and autonomy in a single embedded system.

TinyML Applications Across Industries

Consumer Electronics and Smart Homes

TinyML is making smart homes even smarter. Devices like thermostats, lights, and security cameras can use embedded ML to recognize patterns, detect anomalies, and respond to user behavior in real time. Because inference runs on the device, these products can respond without a round trip to cloud services, which makes them faster and keeps more data local.

Healthcare and Wearables

In the healthcare space, TinyML powers wearable devices that track vital signs, detect falls, or monitor chronic conditions. Since these devices process data locally, they provide immediate feedback while preserving patient privacy. For instance, a wearable ECG monitor using TinyML can detect arrhythmias without needing cloud connectivity.

Industrial and Manufacturing Automation

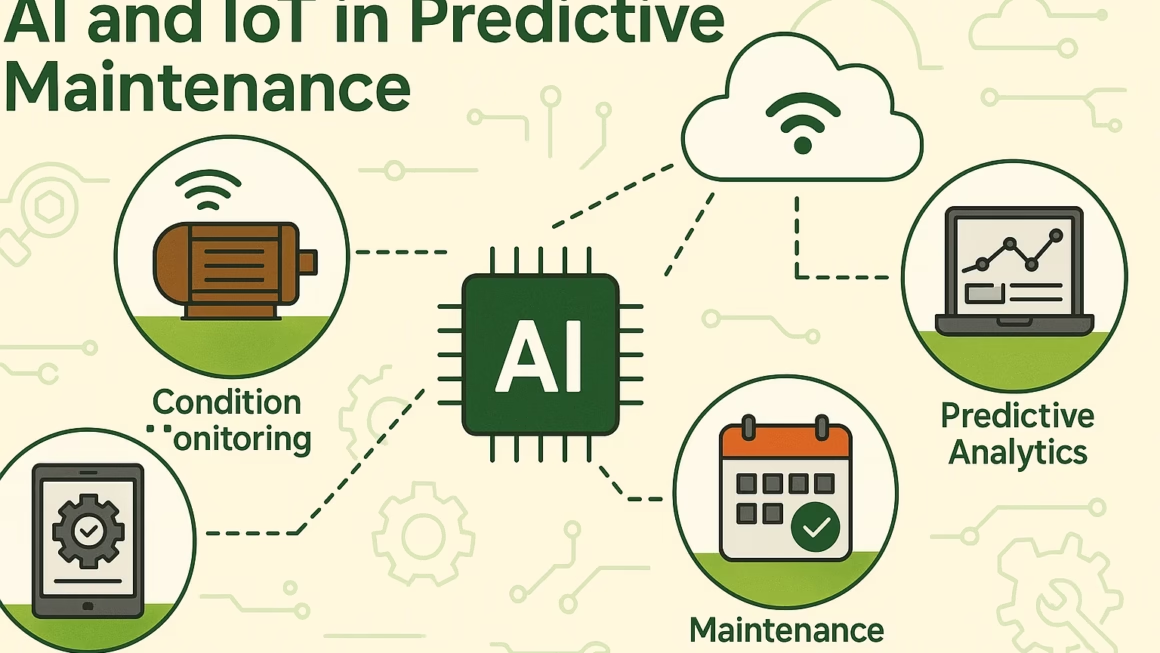

Industrial environments benefit from TinyML by implementing predictive maintenance, vibration analysis, and process optimization. TinyML-enabled sensors can detect equipment failure patterns and alert operators before a breakdown occurs, significantly reducing downtime and repair costs.

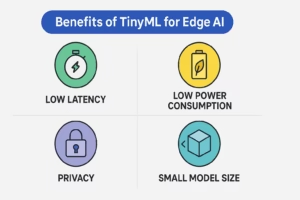

Benefits of TinyML for Edge AI

Low Power Consumption

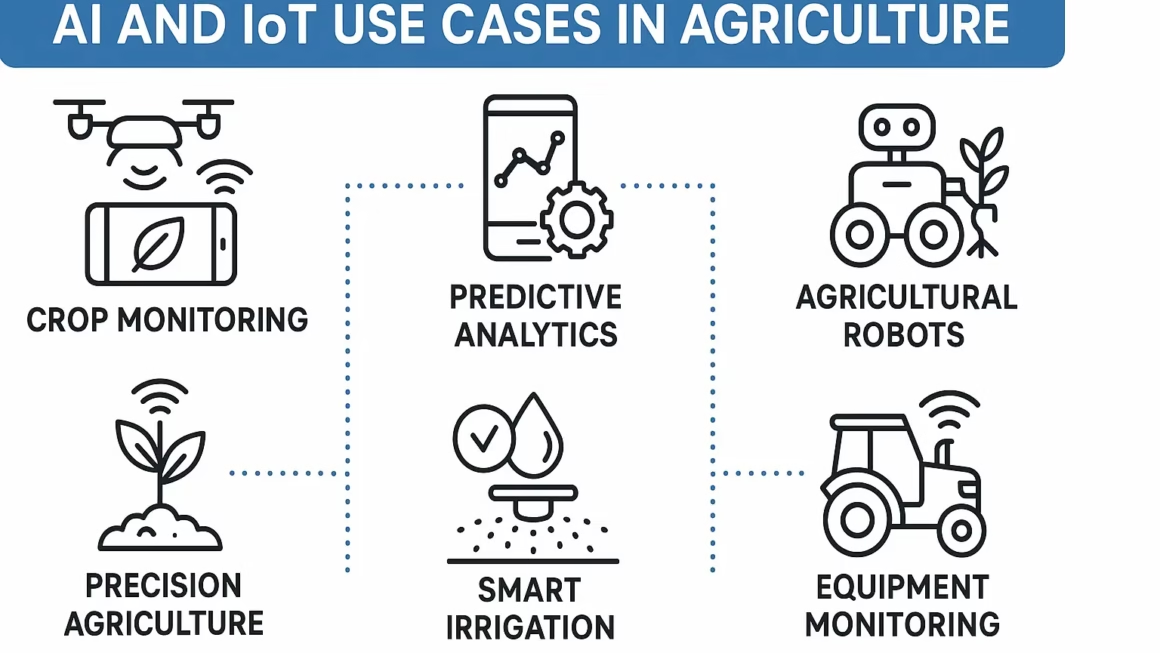

One of the biggest advantages of TinyML is its extremely low power usage. Devices running TinyML models can operate for months or even years on a small battery. This makes it ideal for remote and battery-operated environments like wildlife monitoring or agricultural sensors.
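To make the "months or even years" claim concrete, here is a rough back-of-the-envelope sketch of battery life for a duty-cycled device. The function names and every number below (battery capacity, active and sleep currents, duty cycle) are illustrative assumptions, not measurements from any particular board, and the estimate ignores self-discharge and regulator losses.

```python
def average_current_ma(active_ma, sleep_ma, duty_cycle):
    """Time-weighted average current for a device that sleeps between inferences."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return active_ma * duty_cycle + sleep_ma * (1.0 - duty_cycle)


def battery_life_days(capacity_mah, active_ma, sleep_ma, duty_cycle):
    """Estimated runtime in days (ignores self-discharge and conversion losses)."""
    avg_ma = average_current_ma(active_ma, sleep_ma, duty_cycle)
    return capacity_mah / avg_ma / 24.0


# Assumed values: 220 mAh coin cell, 5 mA while sensing and inferring,
# 0.005 mA in sleep, awake 1% of the time.
days = battery_life_days(220.0, 5.0, 0.005, 0.01)
print(f"Estimated battery life: {days:.0f} days")
```

With these assumed figures the estimate lands at roughly five and a half months. The duty cycle dominates the result, which is why TinyML firmware usually spends most of its time asleep.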

Real-Time Data Processing

By moving intelligence closer to the data source, TinyML minimizes latency. Instead of waiting for a round trip to the cloud, devices can process and react to incoming data immediately. This real-time capability is crucial for latency-sensitive and safety-critical applications, such as fall detection in wearables or fault detection on machinery.

Enhanced Privacy and Security

Data never needs to leave the device in a TinyML system, which greatly reduces the risk of interception or misuse. This decentralized approach supports the data-minimization goals of privacy regulations like GDPR and builds user trust by keeping sensitive information local.

Cost-Effective Deployment

TinyML solutions often use inexpensive microcontrollers that cost a fraction of what application processors or GPUs cost. Their low power usage also reduces operating costs over time. With minimal infrastructure needs, TinyML offers a scalable and budget-friendly approach to AI deployment.

Scalability and Versatility

From agriculture to aerospace, TinyML’s lightweight footprint allows it to be embedded virtually anywhere. Its flexibility supports a wide range of use cases, and its scalability makes it suitable for both consumer and enterprise applications. Developers can tailor models to specific tasks and hardware, further enhancing performance and efficiency.

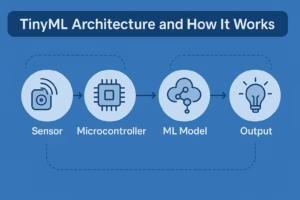

TinyML Architecture and How It Works

Hardware Requirements

TinyML typically runs on microcontrollers such as the Arm Cortex-M series or RISC-V-based chips. These processors are optimized for low power and cost while still being capable of handling simple ML inference tasks. Often, these devices have just a few hundred kilobytes of memory and clock speeds ranging from tens to a few hundred MHz.
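To get a feel for how tight these memory limits are, the sketch below counts the parameters of a small fully connected network and checks them against a storage budget. The layer sizes, the 64 KiB budget, and the helper names are assumptions chosen for illustration; a real deployment also needs room for activations, the runtime, and application code.

```python
def dense_param_count(layer_sizes):
    """Weights plus biases for a fully connected network with these layer widths."""
    return sum(
        n_in * n_out + n_out
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


def fits_in_budget(layer_sizes, bytes_per_param, budget_bytes):
    """True if the raw parameters alone fit within the given byte budget."""
    return dense_param_count(layer_sizes) * bytes_per_param <= budget_bytes


layers = [64, 128, 64, 4]      # hypothetical network shape
budget = 64 * 1024             # hypothetical 64 KiB reserved for the model

params = dense_param_count(layers)
print(f"{params} parameters")
print(f"float32: {params * 4 / 1024:.1f} KiB, fits: {fits_in_budget(layers, 4, budget)}")
print(f"int8:    {params * 1 / 1024:.1f} KiB, fits: {fits_in_budget(layers, 1, budget)}")
```

In this example the 32-bit floating-point version slightly exceeds the budget, while storing one byte per parameter fits comfortably.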

Model Development and Optimization

Developing a TinyML model involves designing lightweight neural networks that can run efficiently on constrained hardware. Tools like TensorFlow Lite for Microcontrollers (TFLM) and Edge Impulse allow developers to train and quantize models, significantly reducing their size and computational needs.
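Quantization is the step that does most of the shrinking, so it helps to see the idea in isolation. The following is a minimal sketch of affine 8-bit quantization written in plain Python; it is not the API of TensorFlow Lite for Microcontrollers or Edge Impulse, and the function names and sample weights are assumptions made for this example.

```python
def quantization_params(values, qmin=-128, qmax=127):
    """Compute a scale and zero point that map the value range onto int8."""
    lo = min(min(values), 0.0)   # include 0.0 so it is represented exactly
    hi = max(max(values), 0.0)
    if hi == lo:
        return 1.0, 0
    scale = (hi - lo) / (qmax - qmin)
    zero_point = round(qmin - lo / scale)
    return scale, max(qmin, min(qmax, zero_point))


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to integers in [qmin, qmax]."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(q_values, scale, zero_point):
    """Recover approximate floats from the quantized integers."""
    return [(q - zero_point) * scale for q in q_values]


weights = [-0.8, -0.1, 0.0, 0.35, 1.2]   # made-up example weights
scale, zero_point = quantization_params(weights)
q_weights = quantize(weights, scale, zero_point)
restored = dequantize(q_weights, scale, zero_point)

for original, approx in zip(weights, restored):
    print(f"{original:+.3f} -> {approx:+.3f}")
```

Each weight now occupies one byte instead of four, at the cost of a rounding error of at most half the scale per value. Production toolchains apply the same idea per tensor or per channel and calibrate the ranges on representative data.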

Deployment and Inference

Once trained, the model is converted to a format compatible with the microcontroller. During runtime, the device collects input (e.g., sound, motion, temperature), feeds it into the model, and takes actions based on the output. This entire cycle happens locally, enabling autonomous behavior even in disconnected environments.
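The sense, infer, act cycle described above can be sketched as a small host-side simulation. On a real device this loop would usually be written in C or C++ against the vendor's drivers and an inference runtime; here the "model" is a single logistic unit, and `read_sensor`, `act`, the weights, and the threshold are all hypothetical stand-ins.

```python
import math


def infer(features, weights, bias):
    """Stand-in for on-device inference: one logistic unit."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def run_cycle(read_sensor, act, weights, bias, threshold=0.5):
    """One sense -> infer -> act pass; returns the score for inspection."""
    features = read_sensor()
    score = infer(features, weights, bias)
    if score >= threshold:
        act(score)
    return score


# Simulated sensor readings and a list that records triggered actions.
readings = iter([[0.1, 0.2], [2.0, 1.5], [0.0, 0.1]])
triggered = []

for _ in range(3):
    run_cycle(lambda: next(readings), triggered.append,
              weights=[1.5, 1.0], bias=-2.0)

print(f"Actions triggered: {len(triggered)}")
```

Only the second reading pushes the score over the threshold, so the action fires once. Nothing in the loop touches a network, which is the point: the decision is made entirely on the device.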

Communication and Integration

Though TinyML devices can work in isolation, they are often integrated into larger systems. For example, a TinyML-based temperature sensor may send occasional summaries to a central dashboard, or trigger an alert via Bluetooth or Wi-Fi if anomalies are detected. Communication protocols must be optimized for energy and bandwidth constraints.
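One way to keep radio use low is to buffer readings on the device, transmit only periodic summaries, and send an alert immediately when something looks wrong. The sketch below illustrates that pattern; the `TemperatureReporter` class, its message format, and the fixed temperature limits (a simple stand-in for a model's anomaly decision) are assumptions for this example rather than part of any specific protocol.

```python
class TemperatureReporter:
    """Buffers readings, emits periodic summaries, and flags anomalies at once."""

    def __init__(self, summary_every, low, high):
        self.summary_every = summary_every
        self.low = low
        self.high = high
        self._buffer = []

    def add(self, reading):
        """Return the messages to transmit for this reading (often none)."""
        messages = []
        if not self.low <= reading <= self.high:
            messages.append({"type": "alert", "value": reading})
        self._buffer.append(reading)
        if len(self._buffer) >= self.summary_every:
            messages.append({
                "type": "summary",
                "count": len(self._buffer),
                "min": min(self._buffer),
                "max": max(self._buffer),
                "mean": sum(self._buffer) / len(self._buffer),
            })
            self._buffer.clear()
        return messages


reporter = TemperatureReporter(summary_every=4, low=10.0, high=35.0)
sent = []
for value in [21.0, 21.5, 48.0, 22.0]:
    sent.extend(reporter.add(value))

for message in sent:
    print(message)
```

Four readings produce only two transmissions: an immediate alert for the out-of-range value and one summary. A longer summary interval trades dashboard freshness for fewer radio wake-ups.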

Challenges and Considerations

Despite its benefits, TinyML has limitations. Model complexity is restricted by memory and processing power. Real-time inference may still pose a challenge for more complex tasks. Balancing accuracy, latency, and power consumption remains a critical aspect of TinyML development and deployment.

The Future of TinyML and Embedded AI

Growth in Edge Intelligence

TinyML is playing a key role in the broader trend toward edge computing. As edge infrastructure matures, we will see more advanced AI models deployed on-device, enhancing autonomy, efficiency, and user experience across sectors.

Open Source and Community Development

Open-source projects like TensorFlow Lite and microTVM, together with community organizations such as the tinyML Foundation, are democratizing access to TinyML development tools. As more developers contribute, innovations in compression, quantization, and deployment strategies will accelerate.

Integration with 5G and IoT

5G networks combined with TinyML will enable ultra-low-latency communication between edge devices and the cloud when needed. This synergy can support applications such as remote diagnostics, smart grids, and industrial automation.