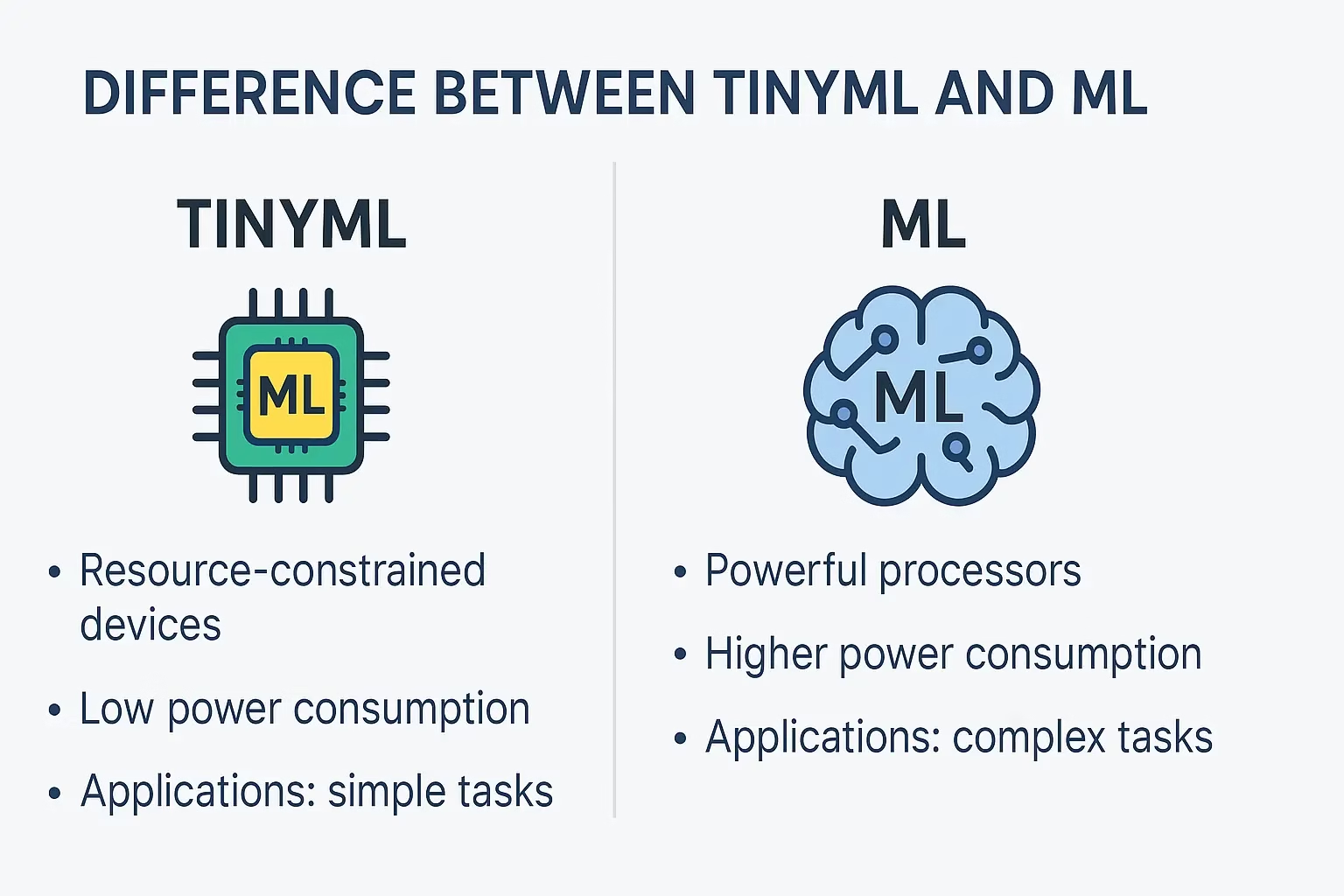

What is the Difference Between TinyML and ML?

What is the difference between TinyML and ML? The question is gaining traction as artificial intelligence continues to evolve. In this guide, we take a close look at the characteristics of TinyML and traditional Machine Learning (ML), highlighting their core differences, applications, and future impact.

Machine Learning (ML) has rapidly evolved from a research-centric discipline into a ubiquitous part of modern technology. From fraud detection systems to personalized recommendations, ML enables machines to make decisions without being explicitly programmed for each case. Meanwhile, TinyML brings this intelligence to the edge: small, resource-constrained devices that power real-time, low-latency applications in IoT, wearables, and more.

Understanding the differences between ML and TinyML is essential for developers, engineers, and business leaders planning to integrate AI into modern infrastructures. This article will compare these two forms of machine learning in depth, examining their core technologies, architectures, limitations, and advantages.

Understanding Machine Learning and TinyML

What is Machine Learning?

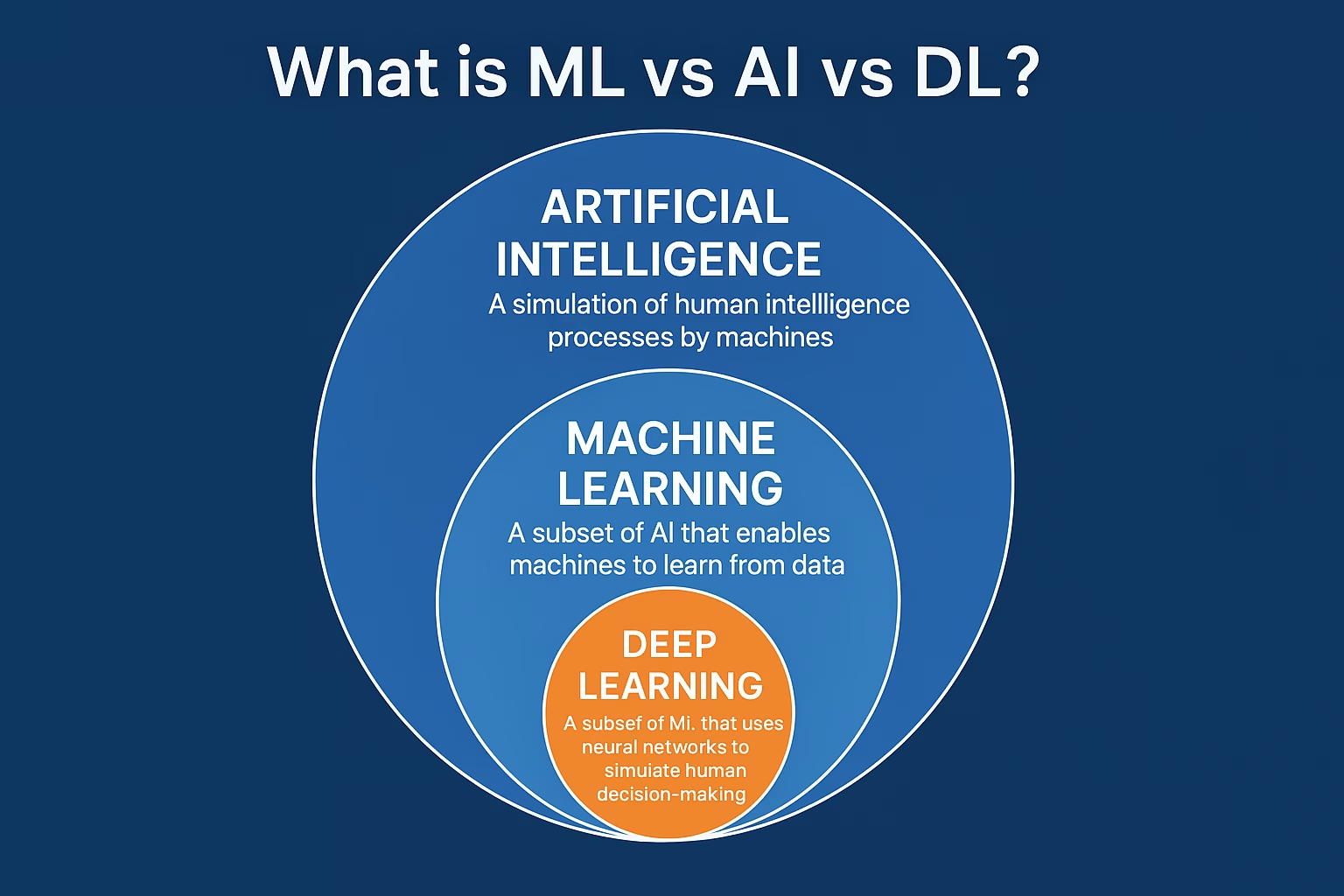

Machine Learning refers to the study and application of algorithms that improve automatically through experience and data. It is a subset of artificial intelligence that includes supervised, unsupervised, and reinforcement learning. Many ML models, particularly large deep neural networks, require significant computational resources and are often trained and deployed on powerful servers, GPUs, or cloud platforms.

Traditional ML workflows involve data collection, preprocessing, training, testing, and deployment. These models typically rely on large datasets and substantial computational power, which makes cloud-based infrastructure a common requirement. ML has been integral to advances in computer vision, natural language processing (NLP), robotics, and recommendation engines.
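
To make the workflow concrete, here is a deliberately tiny sketch in plain Python that walks through collection, preprocessing, training, and testing. The dataset is synthetic and the helper names (`collect_data`, `preprocess`, `train`, `accuracy`) are hypothetical; a real project would use a framework such as scikit-learn, TensorFlow, or PyTorch, and deployment is left out.

```python
import math
import random


def collect_data(n, seed=0):
    """Hypothetical toy dataset: label is 1 when x (plus a little noise) exceeds 0.5."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.random()
        y = 1 if x + rng.gauss(0, 0.05) > 0.5 else 0
        data.append((x, y))
    return data


def preprocess(data):
    """Center the feature around zero (a stand-in for real preprocessing)."""
    return [(x - 0.5, y) for x, y in data]


def train(data, lr=0.5, epochs=200):
    """Fit a one-feature logistic regression with batch gradient descent."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            p = 1.0 / (1.0 + math.exp(-(w * x + b)))  # predicted probability
            grad_w += (p - y) * x
            grad_b += p - y
        w -= lr * grad_w / len(data)
        b -= lr * grad_b / len(data)
    return w, b


def accuracy(model, data):
    """Fraction of examples whose predicted class matches the label."""
    w, b = model
    return sum((1 if w * x + b > 0 else 0) == y for x, y in data) / len(data)


data = preprocess(collect_data(500))          # collection + preprocessing
train_set, test_set = data[:400], data[400:]  # hold out 100 examples for testing
model = train(train_set)                      # training
print(f"test accuracy: {accuracy(model, test_set):.2f}")  # testing
```

Holding out a test set that the model never sees during training is what lets the final accuracy number say something about new data.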

What is TinyML?

TinyML stands for Tiny Machine Learning and refers to running ML models on extremely low-power microcontrollers and embedded devices. Unlike traditional ML, TinyML is optimized for efficiency, ensuring low latency and low energy consumption. It enables intelligence directly on edge devices like sensors, wearables, and household electronics.

TinyML systems must work within tight memory limits, limited processing power, and often low-bandwidth connectivity, trading some model capacity for efficiency. Despite these limitations, TinyML is transforming industries such as agriculture, healthcare, and industrial automation by making real-time, on-device intelligence a reality.

Key Differences Between TinyML and ML

1. Hardware Requirements

ML typically runs on cloud servers, GPUs, or TPUs that offer high computational throughput. Large models can occupy gigabytes of memory and rely on accelerators delivering teraflops (trillions of floating-point operations per second). A typical example is training a deep neural network with TensorFlow or PyTorch on a high-performance server cluster.

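In contrast, TinyML targets devices with kilobytes of RAM and clock speeds measured in tens or hundreds of megahertz. Microcontrollers such as the ARM Cortex-M series are typical TinyML hardware, and these systems often run on only a few milliwatts of power. Dedicated edge accelerators such as Google's Edge TPU are sometimes grouped under the same umbrella, although they draw considerably more power than a microcontroller.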
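
Why milliwatts matter is easiest to see with a quick battery-life estimate. The sketch below assumes a hypothetical 220 mAh coin cell at a nominal 3 V and ignores real-world losses, so treat the results as upper bounds rather than predictions.

```python
def battery_life_days(capacity_mah, voltage_v, avg_power_mw):
    """Idealized runtime estimate: battery energy divided by average power.

    Ignores self-discharge, regulator losses, temperature, and capacity
    derating, so real devices will last less than this.
    """
    energy_mwh = capacity_mah * voltage_v  # mAh x V = mWh
    return energy_mwh / avg_power_mw / 24  # hours -> days


# Hypothetical coin cell: 220 mAh at a nominal 3 V.
print(battery_life_days(220, 3.0, 1.0))  # 1 mW average draw -> 27.5 days
print(battery_life_days(220, 3.0, 0.1))  # duty-cycled to 0.1 mW -> about 275 days
```

The tenfold gap between the two lines is why duty cycling (sleeping between measurements and inferences) matters so much on battery-powered devices.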

2. Model Size and Optimization

Traditional ML models can be hundreds of megabytes in size. They are designed for accuracy and performance with fewer constraints on model size. This flexibility allows engineers to prioritize depth and complexity over efficiency.
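
The size gap comes down to simple arithmetic: parameter count times bytes per parameter. The sketch below counts weights and biases for two hypothetical fully connected networks. The layer widths are invented for illustration, and only weight storage is counted.

```python
def dense_param_count(layer_sizes):
    """Weights plus biases of a fully connected network with the given layer widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical architectures, chosen only to illustrate the scale gap.
server_model = dense_param_count([4096, 4096, 4096, 4096, 1000])
tiny_model = dense_param_count([64, 32, 16, 4])

# Storage for the weights alone (activations and runtime code are extra).
print(f"{server_model:,} params x 4 bytes (float32) = {server_model * 4 / 1e6:.1f} MB")
print(f"{tiny_model:,} params x 1 byte (int8) = {tiny_model / 1e3:.1f} KB")
```

This prints roughly 217.8 MB for the larger network and 2.7 KB for the smaller one, which is the difference between a server workload and something that fits in a microcontroller's flash.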

TinyML models are typically smaller than 1 MB and are shrunk with compression techniques such as quantization, pruning, and knowledge distillation. These optimizations cut memory and compute requirements while retaining acceptable accuracy.
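
Two of these techniques can be sketched in a few lines of plain Python on a flat list of weights. The sketch assumes symmetric per-tensor int8 quantization and unstructured magnitude pruning, which are two common variants. The function names are hypothetical, and real converters such as the TensorFlow Lite converter apply more elaborate schemes automatically. Knowledge distillation is not shown because it requires a full training loop.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights) or 1.0  # guard against all-zero input
    scale = max_abs / 127.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate floats."""
    return [scale * v for v in q]


def magnitude_prune(weights, sparsity):
    """Set the `sparsity` fraction of weights with the smallest |w| to zero."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


weights = [0.423, -1.27, 0.051, 0.9, -0.034, 0.617]  # hypothetical layer weights
q, scale = quantize_int8(weights)
print(q)  # [42, -127, 5, 90, -3, 62]
error = max(abs(a - b) for a, b in zip(weights, dequantize(q, scale)))
print(f"scale={scale:.4f}, max reconstruction error={error:.4f}")
print(magnitude_prune(weights, 0.5))  # [0.0, -1.27, 0.0, 0.9, 0.0, 0.617]
```

Each int8 value takes one byte instead of four, and the rounding error per weight is at most half the scale. Pruned zeros only save space when the storage format or runtime takes advantage of sparsity.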

Real-World Applications of TinyML and ML

1. TinyML Applications

TinyML excels in scenarios where real-time processing, privacy, and energy efficiency are critical. Some use cases include:

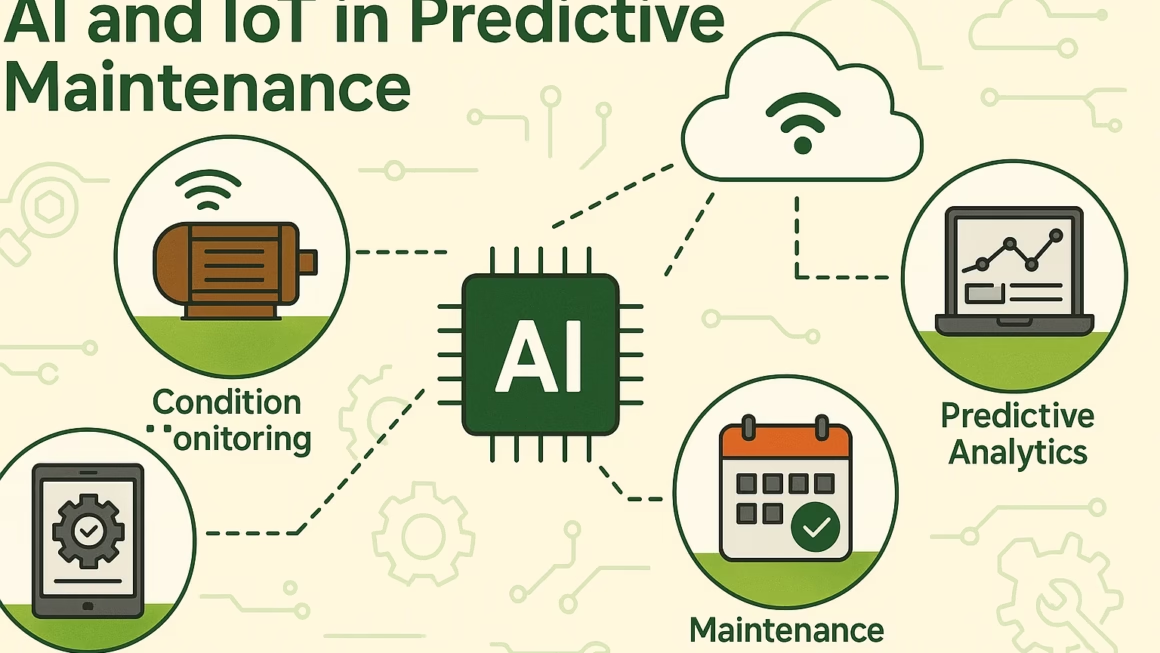

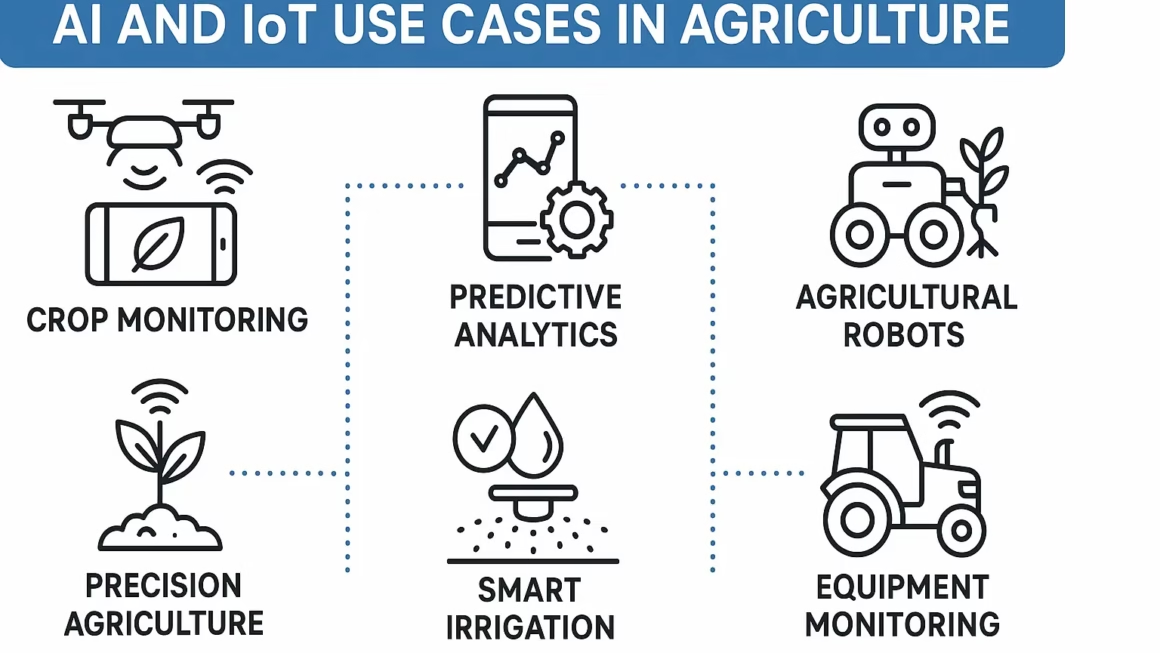

- Smart agriculture: Monitoring soil conditions with embedded sensors.

- Healthcare: Wearable devices for heartbeat anomaly detection.

- Industrial IoT: Real-time fault detection in motors and machinery.

In each of these cases, TinyML removes the need for constant cloud connectivity and reduces data transmission costs, all while ensuring faster response times.
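
The "process locally, transmit only what matters" pattern can be sketched as a streaming detector that runs in constant memory. This is a simple statistical z-score rule, used here as a stand-in for a trained TinyML model, and the class name, thresholds, and sensor readings are all hypothetical. On an actual microcontroller, this logic would typically be written in C or C++.

```python
import math
import random


class StreamingAnomalyDetector:
    """Flags readings far from the running mean using constant memory.

    Running mean and variance come from Welford's online algorithm,
    so no history of past samples is stored.
    """

    def __init__(self, z_threshold=4.0, warmup=20):
        self.z_threshold = z_threshold
        self.warmup = warmup  # readings to observe before flagging anything
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        """Return True if x looks anomalous, then fold x into the statistics."""
        anomalous = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            anomalous = std > 0 and abs(x - self.mean) / std > self.z_threshold
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return anomalous


# Hypothetical vibration readings with one injected fault at index 150.
rng = random.Random(42)
readings = [rng.gauss(1.0, 0.02) for _ in range(200)]
readings[150] = 1.5

detector = StreamingAnomalyDetector()
to_transmit = [(i, round(r, 3)) for i, r in enumerate(readings) if detector.update(r)]
print(to_transmit)  # only these readings would be sent upstream
```

Instead of streaming all 200 readings to the cloud, the device sends only the flagged ones. This is where the savings in bandwidth and response time come from.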

2. Machine Learning Applications

ML applications often include high-complexity tasks that benefit from powerful computation and large-scale datasets:

- Natural Language Processing: Chatbots and voice assistants.

- Computer Vision: Facial recognition and autonomous vehicles.

- Financial Modeling: Predicting stock prices or customer churn.

These systems typically require frequent updates and retraining to remain accurate, which is more feasible in a cloud environment with scalable resources.

Development Tools and Frameworks

Popular Tools for ML

ML developers frequently use frameworks such as TensorFlow, PyTorch, and scikit-learn. These tools provide libraries for data preprocessing, model training, and deployment, and TensorFlow and PyTorch add GPU acceleration for faster experimentation.

Popular Tools for TinyML

TinyML projects rely on lightweight, hardware-optimized tools. TensorFlow Lite for Microcontrollers runs model inference on microcontroller units (MCUs), Edge Impulse provides a platform for collecting data and for training and deploying models to embedded targets, and CMSIS-NN supplies optimized neural network kernels for ARM Cortex-M processors. Together, these tools streamline training, model conversion, and deployment.
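
Many microcontrollers have no file system, so a converted model is usually compiled into the firmware as a byte array. This step is commonly done with the Unix `xxd -i` command. The hypothetical helper below sketches the same idea in Python. The variable names `g_model` and `g_model_len` are illustrative assumptions, not names required by any framework.

```python
def to_c_array(model_bytes, var_name="g_model", bytes_per_line=12):
    """Render raw model bytes as C source, similar in spirit to `xxd -i`."""
    lines = [f"const unsigned char {var_name}[] = {{"]
    for start in range(0, len(model_bytes), bytes_per_line):
        chunk = model_bytes[start:start + bytes_per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    lines.append("};")
    lines.append(f"const unsigned int {var_name}_len = {len(model_bytes)};")
    return "\n".join(lines)


# Stand-in bytes; in practice these would be read from the converted model file,
# e.g. open("model.tflite", "rb").read() (hypothetical file name).
fake_model = bytes(range(20))
print(to_c_array(fake_model, bytes_per_line=8))
```

The generated source is compiled into the firmware alongside the inference runtime. Some runtimes also expect the array to be aligned in memory, so check the documentation of the runtime you target.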

Challenges and Future Outlook

Challenges in TinyML

Despite its promise, TinyML faces several challenges, including:

- Limited computing and memory resources.

- Energy constraints for battery-powered devices.

- Difficulty in updating models once deployed in the field.

Solving these issues requires continuous innovation in chip design, model compression, and edge AI frameworks.

Challenges in Traditional ML

Traditional ML also faces obstacles:

- Data privacy concerns with cloud-based processing.

- High costs of infrastructure and scaling.

- Longer response times due to data transmission delays.

However, cloud ML platforms such as Amazon SageMaker and Google Vertex AI aim to address some of these bottlenecks through automation and cost optimization.
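
The transmission-delay point can be made concrete with back-of-the-envelope arithmetic. Every number in this sketch is a hypothetical assumption, and the model ignores connection setup, queuing, and retries.

```python
def cloud_latency_ms(payload_bytes, uplink_mbps, rtt_ms, server_infer_ms):
    """Rough end-to-end latency when a device ships raw data to a cloud model."""
    upload_ms = payload_bytes * 8 / (uplink_mbps * 1_000_000) * 1000
    return rtt_ms + upload_ms + server_infer_ms


# Hypothetical scenario: one second of 16 kHz, 16-bit mono audio (32,000 bytes)
# sent over a 1 Mbit/s uplink with an 80 ms round trip and 5 ms of server inference.
cloud_ms = cloud_latency_ms(32_000, 1.0, 80, 5)
on_device_ms = 30  # hypothetical inference time for a small on-MCU model

print(f"cloud: {cloud_ms:.0f} ms, on-device: {on_device_ms} ms")  # cloud: 341 ms, on-device: 30 ms
```

With a fast link or a model too large for the device, the comparison can flip. The point is that the upload cost grows with the raw data size, while on-device inference avoids it entirely.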

Which One Should You Choose?

When to Choose TinyML

Choose TinyML when your project requires low power consumption, real-time inference, and privacy-preserving data handling. It is well suited to IoT environments, remote monitoring, and wearable tech.

When to Choose ML

Opt for traditional ML when you have access to rich datasets, need high-accuracy models, and can leverage cloud-based resources. It’s ideal for tasks such as fraud detection, language translation, and large-scale image classification.

Conclusion: TinyML and ML in a Converging World

TinyML and ML are not competing technologies but complementary approaches. As edge computing becomes more mainstream, TinyML will enable scalable intelligence at the edge, while ML continues to power complex decision-making in centralized systems.

Businesses and developers must consider both technologies when designing intelligent systems. Understanding their strengths, limitations, and use cases will ensure more efficient, scalable, and user-centric AI deployments across industries.