GPU Server for Deep Learning

In today’s AI-driven world, a powerful GPU server for deep learning is essential for researchers, data scientists, and AI engineers. From training massive neural networks to deploying real-time inference engines, GPU servers offer the computational backbone for cutting-edge machine learning tasks.

Whether you’re building your own AI infrastructure or choosing a cloud-based solution, understanding the key features and benefits of GPU servers is crucial. This in-depth guide explores everything from hardware requirements to real-world applications of GPU-powered deep learning.

Why a GPU Server is Essential for Deep Learning

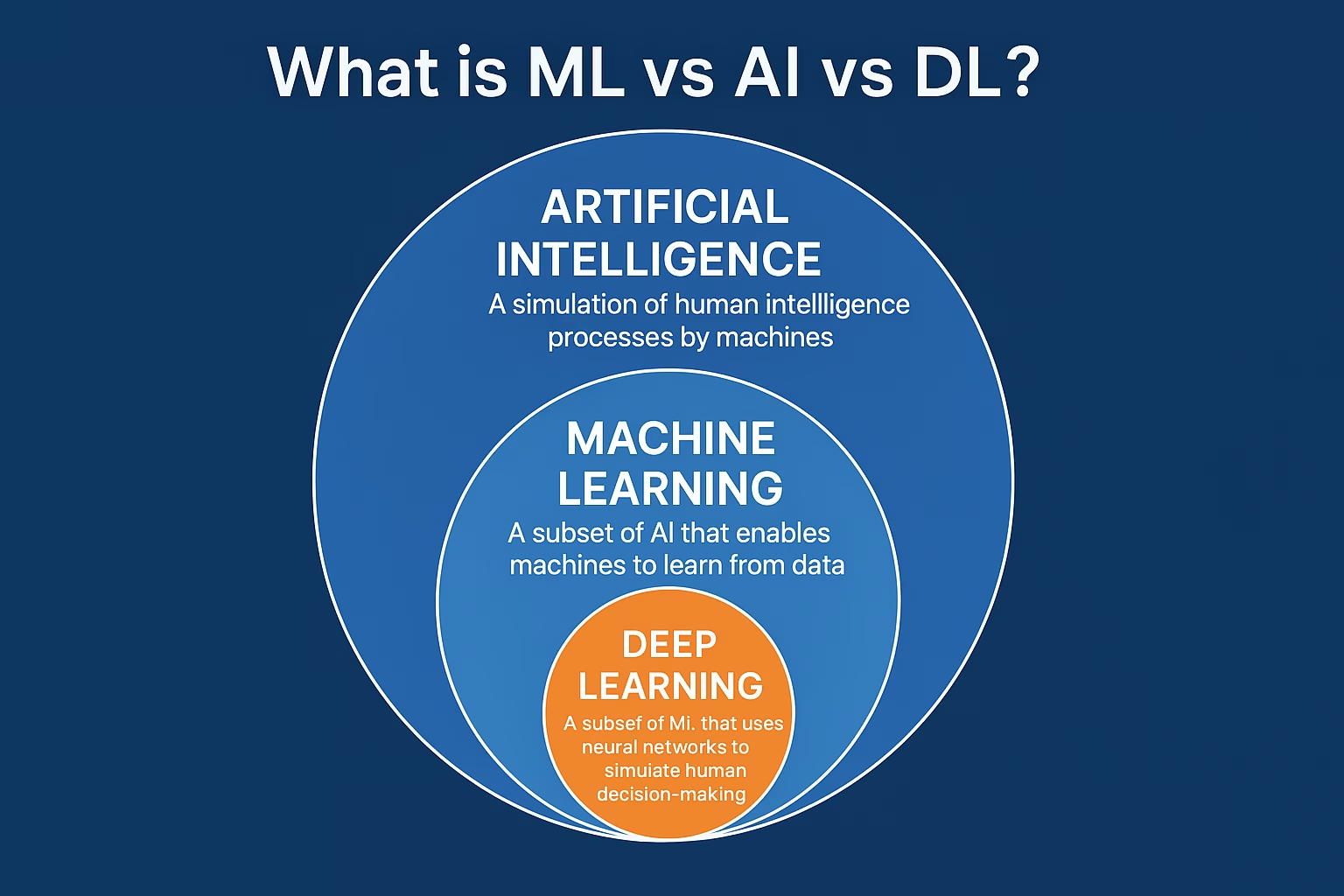

Deep learning models often consist of millions or even billions of parameters, and training them requires immense computational power. While CPUs are suitable for basic computing tasks, they fall short when it comes to the parallel processing needed for matrix operations and backpropagation algorithms. This is where GPU servers come into play.

Graphics Processing Units (GPUs) are designed for high-throughput computing. They can process thousands of operations in parallel, making them ideal for training convolutional neural networks (CNNs), recurrent neural networks (RNNs), and transformer-based models. By leveraging a GPU server, deep learning tasks that would take days or weeks on a CPU can be completed in a matter of hours.

Key Features of a High-Performance GPU Server for Deep Learning

Choosing the right GPU server involves more than just picking a graphics card. The overall system architecture, memory capacity, cooling system, and scalability options all play a crucial role in performance. A good deep learning server should feature one or more high-end GPUs such as the NVIDIA A100, RTX 6000 Ada, or H100 Tensor Core GPUs.

Additionally, RAM size and storage speed significantly influence training performance. Deep learning workloads often require 256GB or more of RAM and fast NVMe SSD storage to efficiently feed data to the GPUs. Networking capability is also key, especially for distributed training setups where multiple GPU servers collaborate over high-speed links like Infiniband or 100GbE Ethernet.

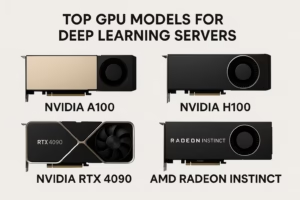

Top GPU Models for Deep Learning Servers

The GPU market is vast, but not all cards are created equal when it comes to AI. NVIDIA dominates the space with a range of cards optimized for deep learning, including the A100, H100, and the RTX series. The A100, for example, offers over 300 TFLOPS of performance and supports mixed-precision training, dramatically speeding up neural network training without sacrificing accuracy.

Other top contenders include the NVIDIA RTX 4090 for smaller-scale setups and AMD Instinct MI300 for enterprises seeking alternatives. For professional-grade tasks, tensor cores and support for libraries like CUDA, cuDNN, and TensorRT are essential features to look for in a GPU.

Scalability is another important factor. Some servers are built to host 4, 8, or even 16 GPUs in a single chassis. These configurations are ideal for training extremely large models or for research labs running multiple experiments concurrently. Ensure the server chassis and motherboard support PCIe Gen 4 or 5 for maximum throughput.

Cloud vs On-Premise GPU Servers for Deep Learning

One of the biggest decisions you’ll face is whether to invest in an on-premise GPU server or utilize cloud-based GPU solutions. Each option has its pros and cons. On-premise servers provide full control, lower long-term costs, and data privacy. However, they require upfront capital, ongoing maintenance, and power/cooling infrastructure.

Cloud GPU services from providers like AWS (with EC2 P4/P5 instances), Google Cloud (A2 instances), and Microsoft Azure offer flexibility and scalability without the hardware burden. These platforms are ideal for startups or businesses that experience fluctuating AI workloads. The downside? Higher long-term costs and potential latency or compliance issues with sensitive data.

Hybrid cloud setups are gaining popularity, where organizations maintain a local server for daily tasks and burst into the cloud during peak demand. This model balances performance, cost, and scalability effectively.

How to Build or Choose the Best GPU Server for Deep Learning

Building your own GPU server allows full customization. Start by selecting a powerful CPU (e.g., AMD EPYC or Intel Xeon), a server-grade motherboard with sufficient PCIe slots, and robust power supplies. Add one or more GPUs depending on your budget and workload. Ensure you include adequate cooling, as GPUs can generate substantial heat under load.

If you prefer a turnkey solution, companies like Lambda, Exxact, Supermicro, and Dell offer pre-configured GPU servers optimized for AI workloads. These systems are tested for compatibility and come with enterprise-level support, which is invaluable for mission-critical deployments.

When purchasing, always consider future expansion. Choose a server chassis that allows for additional GPUs or storage upgrades down the line. Also, verify that the server supports major deep learning frameworks like TensorFlow, PyTorch, and MXNet out of the box.

Common Use Cases for GPU Servers in Deep Learning

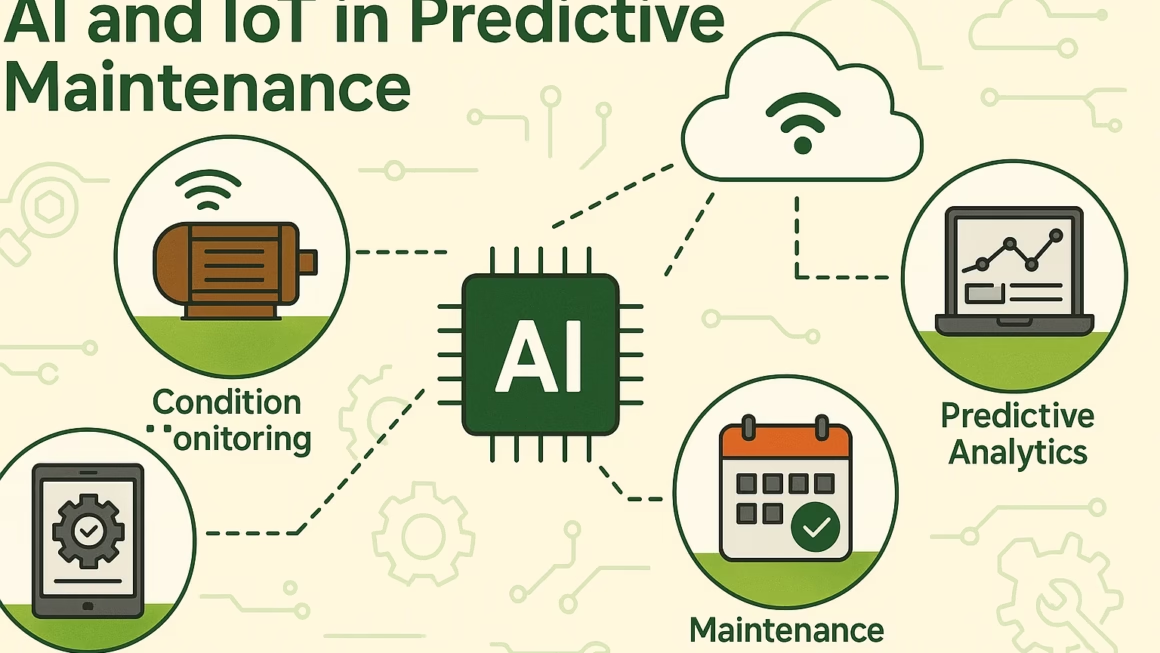

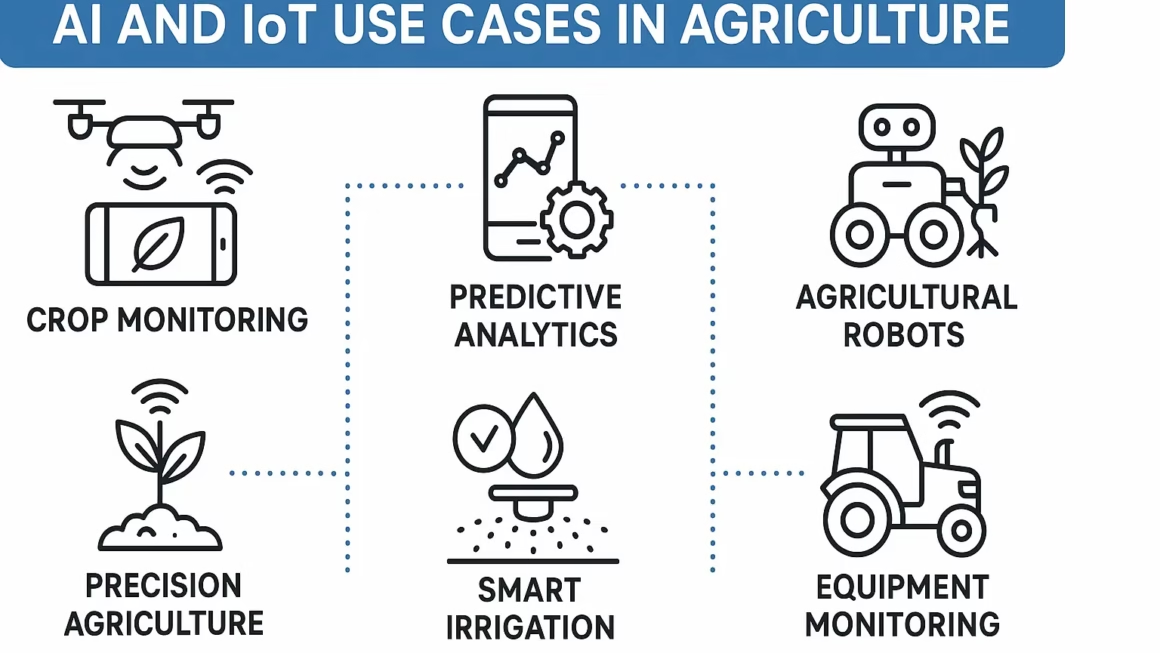

GPU servers are used in a wide range of industries and applications. In healthcare, they’re used to train models for medical image analysis, disease diagnosis, and drug discovery. In autonomous driving, deep learning models trained on GPU servers enable real-time decision-making and environment recognition.

Finance firms use GPU servers to power high-frequency trading algorithms, fraud detection systems, and sentiment analysis tools. In entertainment, deep learning enables real-time voice synthesis, facial animation, and video upscaling—all of which require powerful GPU processing.

Even academia and research institutions rely heavily on GPU servers for simulations, natural language processing, and experimentation with new neural architectures. These servers are the foundation for breakthroughs in AI that drive innovation across every field imaginable.

Best Practices for Maintaining a GPU Server for AI Workloads

Maintaining a GPU server involves both software and hardware considerations. Regularly update your drivers, CUDA toolkit, and deep learning libraries to ensure optimal compatibility and performance. Use monitoring tools like NVIDIA’s DCGM or Prometheus-Grafana stack to track temperature, memory usage, and GPU utilization.

Keep your system clean and well-ventilated to avoid thermal throttling. Dust and heat can reduce performance and lifespan of your hardware. Invest in redundancy for critical components like power supplies and hard drives to minimize downtime.

Security is also crucial, especially in shared environments. Use containerization technologies like Docker with GPU support to isolate workloads, and enforce role-based access control for users. Back up your models and training data regularly to prevent loss in case of hardware failure.

One thought on “Top GPU Server Solutions for Deep Learning Performance”