Equivariant Architectures for Learning in Deep Weight Spaces

Equivariant architectures for learning in deep weight spaces build symmetry constraints directly into the model architecture. Doing so can make learning more efficient, generalization more robust, and representations more useful, especially in the high-dimensional weight spaces of deep networks.

Recent advancements in deep learning have shown that traditional models often overlook the inherent symmetries in data and model weights. Equivariant architectures help capture these symmetries, reducing redundancy and improving efficiency. This concept becomes even more important when operating in deep weight spaces, where the number of parameters can be overwhelming without structured inductive biases.

In this post, we’ll explore the foundational principles, benefits, and applications of equivariant architectures, particularly when applied to the weight space of deep learning models. We’ll also look at current research trends, open challenges, and where the field may be heading.

Understanding Equivariance in Deep Learning

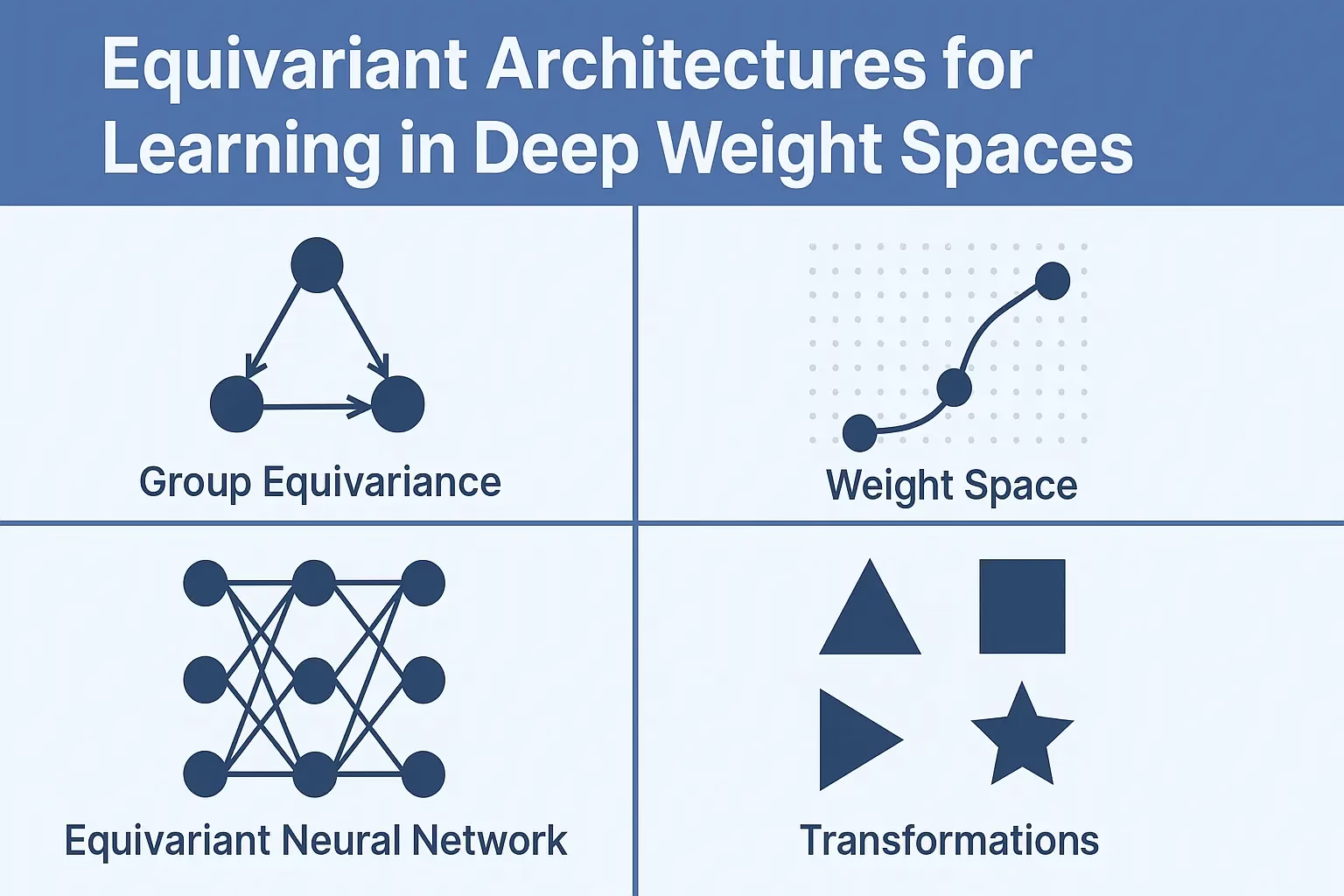

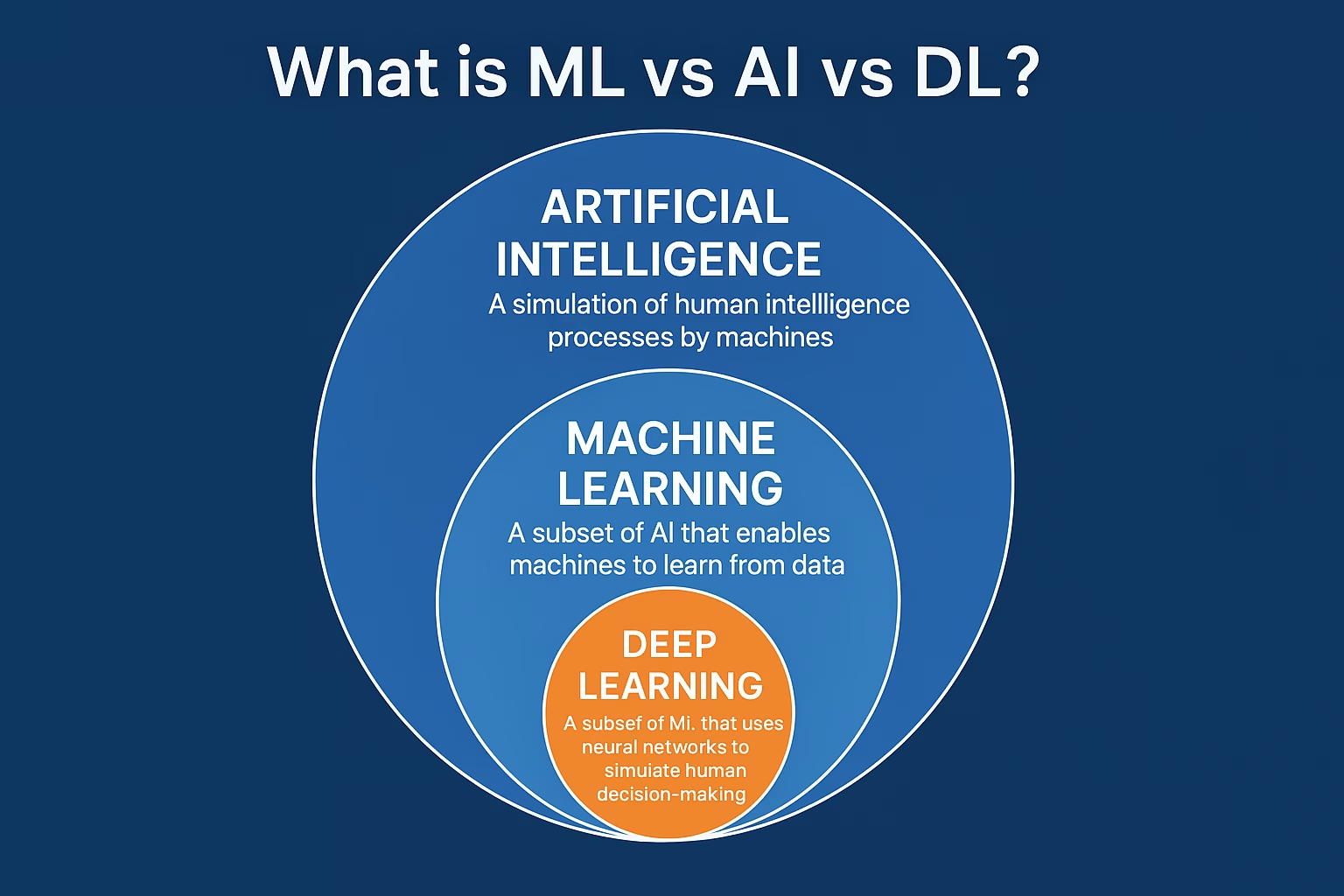

Equivariance in deep learning refers to the property where transformations of the input result in predictable transformations of the output. For example, in convolutional neural networks (CNNs), translation equivariance ensures that a shifted input leads to a correspondingly shifted output, making CNNs effective for image recognition tasks.
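Translation equivariance can be checked numerically. The sketch below is a minimal illustration, assuming a 1D signal with circular (wrap-around) boundaries; `circular_conv` and the variable names are illustrative and not taken from any library.

```python
import numpy as np

def circular_conv(signal, kernel):
    """Circular 1D cross-correlation: out[i] = sum_k kernel[k] * signal[(i + k) % n]."""
    n = len(signal)
    return np.array([
        sum(kernel[k] * signal[(i + k) % n] for k in range(len(kernel)))
        for i in range(n)
    ])

rng = np.random.default_rng(0)
x = rng.normal(size=8)   # input signal
w = rng.normal(size=3)   # filter weights
shift = 2

# Shifting the input and then filtering ...
shift_then_conv = circular_conv(np.roll(x, shift), w)
# ... matches filtering and then shifting the output.
conv_then_shift = np.roll(circular_conv(x, w), shift)

is_equivariant = np.allclose(shift_then_conv, conv_then_shift)
print("translation equivariant:", is_equivariant)
```

With zero padding instead of wrap-around, the same identity holds only away from the borders, which is why practical CNNs are approximately rather than exactly translation equivariant.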

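Applying equivariance principles to the weight space of deep models lets them exploit symmetries of the parameterization itself. The best-known example is neuron permutation: reordering the hidden units of a layer, together with the matching rows and columns of the adjacent weight matrices, changes the weights but not the function the network computes. Instead of treating every weight configuration independently, these models identify and use such patterns and redundancies, making learning more data-efficient and generalizable across different tasks.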
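The neuron permutation symmetry of an MLP can be checked numerically. The following minimal sketch, assuming a two-layer ReLU network written in NumPy (the function `mlp` and all variable names are illustrative), reorders the hidden units together with the matching rows of `W1` and columns of `W2`, then confirms that the output is unchanged even though the weights differ.

```python
import numpy as np

def mlp(x, W1, b1, W2, b2):
    """Two-layer MLP with a ReLU hidden layer."""
    h = np.maximum(W1 @ x + b1, 0.0)
    return W2 @ h + b2

rng = np.random.default_rng(0)
d_in, d_hidden, d_out = 4, 6, 3
W1 = rng.normal(size=(d_hidden, d_in))
b1 = rng.normal(size=d_hidden)
W2 = rng.normal(size=(d_out, d_hidden))
b2 = rng.normal(size=d_out)

# A fixed, non-trivial reordering of the hidden neurons (cyclic shift).
perm = np.roll(np.arange(d_hidden), 1)

# Permute rows of the first layer and columns of the second layer consistently.
W1_p, b1_p = W1[perm], b1[perm]
W2_p = W2[:, perm]

x = rng.normal(size=d_in)
same_function = np.allclose(mlp(x, W1, b1, W2, b2), mlp(x, W1_p, b1_p, W2_p, b2))
weights_differ = not np.allclose(W1, W1_p)
print("same function:", same_function, "| different weights:", weights_differ)
```

A hidden layer of width n has n! such reorderings, so many distinct points in weight space represent the same function. A model that takes weights as input can be designed to respect this redundancy rather than relearn it from data.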

Equivariant Architectures for Learning in Deep Weight Spaces

The core idea behind using equivariant architectures in deep weight spaces is to encode symmetry constraints directly into the learning mechanism. This includes operations that remain consistent under transformations like rotation, reflection, or permutation. Group theory often serves as the mathematical backbone for implementing these constraints.

In high-dimensional weight spaces, these architectures help reduce the effective search space during training. Instead of navigating a massive and often redundant landscape, models guided by equivariance can focus on unique and informative parameter configurations. This can lead to faster convergence and to models that generalize better.

Group Equivariance and Weight Parameterization

Group-equivariant networks build in transformations drawn from symmetry groups, such as SO(3) for 3D rotations or S_n for permutations of n elements. They are especially useful in scientific domains where the quantities of interest are invariant or equivariant under such transformations. In weight space, parameterizing networks to respect these group transformations creates structured and efficient learning pathways.
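As an illustration of equivariance to S_n, here is a minimal sketch of a permutation-equivariant linear layer in the style of Deep Sets: each element is transformed on its own, and a pooled summary of the whole set is added back to every element. The function name `perm_equivariant_linear` and the matrices `A` and `B` are assumptions made for this example.

```python
import numpy as np

def perm_equivariant_linear(X, A, B):
    """Map a set of n elements (rows of X) to n outputs.

    X @ A acts on each element independently; the mean over elements,
    multiplied by B, is broadcast to every row.
    """
    pooled = X.mean(axis=0, keepdims=True)   # shape (1, d_in)
    return X @ A + pooled @ B                # shape (n, d_out)

rng = np.random.default_rng(0)
n, d_in, d_out = 5, 3, 2
X = rng.normal(size=(n, d_in))
A = rng.normal(size=(d_in, d_out))
B = rng.normal(size=(d_in, d_out))

# A fixed, non-trivial permutation of the n elements (reverse order).
perm = np.arange(n)[::-1]

# Permuting the input rows permutes the output rows in the same way.
out_of_permuted = perm_equivariant_linear(X[perm], A, B)
permuted_out = perm_equivariant_linear(X, A, B)[perm]
is_equivariant = np.allclose(out_of_permuted, permuted_out)
print("S_n equivariant:", is_equivariant)
```

The weight sharing is what enforces the symmetry: every element goes through the same `A`, and the only interaction between elements is through an order-independent pooling step.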

Benefits for Transfer Learning

Equivariant models in weight spaces facilitate transfer learning by capturing reusable transformations and structures. When a model trained on one task understands the symmetry of its weight space, it can adapt more rapidly to a related task, needing fewer examples and shorter fine-tuning time.

Architectural Designs and Implementation Strategies

Several architecture types have been proposed to operationalize equivariant principles in deep weight spaces. One approach is to design layers that are inherently equivariant to specific group actions. This ensures that the output transforms predictably based on changes to input or weights.

Steerable CNNs, for example, extend traditional convolutions to respect rotational symmetries. Graph neural networks (GNNs) often incorporate permutation equivariance to model relationships in unordered data. For deep weight spaces, the relevant group is usually the permutations of hidden neurons, and layers are built to be equivariant to those permutations; tensor field networks and Lie group parameterizations play a similar role for continuous symmetries such as 3D rotations.
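Full steerable CNNs are beyond a short snippet, but a simpler relative shows the same idea. The sketch below assumes a single "lifting" layer in the style of group-equivariant CNNs for the four 90-degree rotations (the group C4): one filter is applied in all four orientations. The helper names `correlate_valid` and `c4_lift` are illustrative.

```python
import numpy as np

def correlate_valid(x, k):
    """Plain 2D cross-correlation without padding ('valid' mode)."""
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * k)
    return out

def c4_lift(x, k):
    """Correlate x with the filter rotated by 0, 90, 180 and 270 degrees."""
    return np.stack([correlate_valid(x, np.rot90(k, r)) for r in range(4)])

rng = np.random.default_rng(0)
x = rng.normal(size=(7, 7))   # square input image
k = rng.normal(size=(3, 3))   # one learnable filter

features = c4_lift(x, k)                     # shape (4, 5, 5)
features_of_rotated = c4_lift(np.rot90(x), k)

# Rotating the input rotates every feature map and cyclically shifts
# the orientation axis by one step.
expected = np.rot90(np.roll(features, 1, axis=0), axes=(1, 2))
is_equivariant = np.allclose(features_of_rotated, expected)
print("C4 equivariant:", is_equivariant)
```

Note that the output is not simply a rotated copy of the original features: the orientation channels are also permuted. This combined action on the output is what equivariance to a rotation group looks like in practice.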

Fourier Transform and Spectral Methods

Spectral methods apply Fourier transforms to capture frequency-domain representations of transformations. These are particularly useful in rotationally equivariant networks and can be adapted to analyze the structure of weight spaces, identifying symmetry-based compression opportunities.
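The link between Fourier transforms and symmetry can be seen in the shift theorem. In this minimal sketch, which assumes a 1D signal and circular shifts, shifting the signal multiplies each Fourier coefficient by a known phase factor (an equivariant response) and leaves each magnitude unchanged (an invariant one). Variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
N, s = 16, 3
x = rng.normal(size=N)

X = np.fft.fft(x)                 # spectrum of the original signal
Y = np.fft.fft(np.roll(x, s))     # spectrum of the signal shifted by s samples

# Shift theorem: Y[k] = X[k] * exp(-2*pi*i*k*s / N)
phase = np.exp(-2j * np.pi * np.arange(N) * s / N)

equivariant_in_phase = np.allclose(Y, X * phase)
invariant_in_magnitude = np.allclose(np.abs(Y), np.abs(X))
print("phase follows the shift:", equivariant_in_phase)
print("magnitude ignores the shift:", invariant_in_magnitude)
```

Rotationally equivariant networks use the analogous decomposition for rotation groups, where the group action likewise takes a simple, structured form in the frequency domain.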

Differentiable Group Actions

Integrating differentiable group actions into optimization routines allows networks to learn transformation invariance as part of their training. This enhances the model’s ability to maintain equivariance even as the architecture adapts or scales.

Applications in Physics, Robotics, and Computer Vision

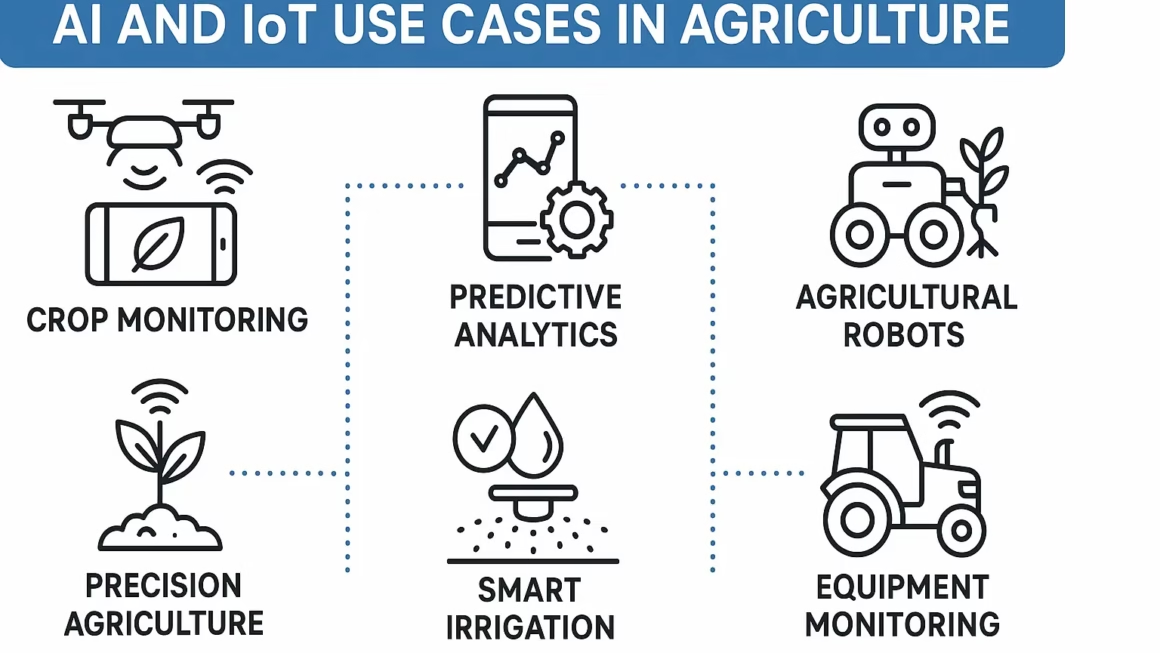

Equivariant architectures shine in domains where data naturally adheres to geometric or physical symmetries. In physics, symmetries are closely tied to conservation laws, and networks that respect those symmetries tend to give more accurate and physically consistent predictions.

In robotics, modeling sensor and actuator symmetries enables more robust control policies and transferability across different robot designs. Equivariant models also reduce the need for extensive retraining when changing hardware configurations or environments.

Computer vision, especially 3D object recognition and medical imaging, also benefits from equivariant models. Models that are equivariant or invariant to rotation and scale handle objects seen from different angles and distances more reliably, which helps with tasks like tumor detection or scene reconstruction from different viewpoints.

Equivariance in Generative Models

Generative models like GANs and VAEs can also integrate equivariant architectures to produce more diverse and structured outputs. By constraining the latent and weight spaces using symmetries, these models generate outputs that preserve global structure and semantics.

Challenges and Future Directions

Despite their promise, equivariant architectures for deep weight spaces face several challenges. One is computational complexity. Implementing group-theoretic transformations and ensuring equivariance throughout a deep network can be computationally expensive and demands careful mathematical treatment.

Another issue is generalization. While many models excel under specific symmetry groups, designing flexible systems that can adapt to unknown or composite transformations remains an open research area. Building hybrid architectures that can learn or approximate symmetries dynamically may provide a solution.

Future research is focusing on developing modular, scalable equivariant building blocks that integrate seamlessly into existing deep learning frameworks. Efforts are also underway to apply these techniques to large language models and multimodal systems.

Equivariant Transformers and Self-Attention

Transformers are being adapted to incorporate equivariance into self-attention mechanisms. This allows attention weights to respect spatial or semantic symmetries in the data, leading to more robust and interpretable outputs.
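Plain self-attention already has one symmetry built in: without positional encodings it is equivariant to permutations of the input tokens. The following minimal sketch assumes a single attention head in NumPy; `softmax`, `self_attention`, and the weight names are illustrative.

```python
import numpy as np

def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention, no positional encoding."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[1])
    return softmax(scores, axis=-1) @ V

rng = np.random.default_rng(0)
n_tokens, d_model, d_head = 6, 8, 4
X = rng.normal(size=(n_tokens, d_model))
Wq = rng.normal(size=(d_model, d_head))
Wk = rng.normal(size=(d_model, d_head))
Wv = rng.normal(size=(d_model, d_head))

# A fixed, non-trivial reordering of the tokens (cyclic shift).
perm = np.roll(np.arange(n_tokens), 2)

# Reordering the tokens reorders the outputs in the same way.
out_of_permuted = self_attention(X[perm], Wq, Wk, Wv)
permuted_out = self_attention(X, Wq, Wk, Wv)[perm]
is_equivariant = np.allclose(out_of_permuted, permuted_out)
print("permutation equivariant:", is_equivariant)
```

Positional encodings deliberately break this symmetry. Equivariant transformer variants instead choose which symmetries to keep and design the attention scores and value updates so that those symmetries are respected.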

Conclusion: The Road Ahead for Equivariant Deep Learning

Equivariant architectures for learning in deep weight spaces represent a powerful convergence of geometry, group theory, and deep learning. They offer new ways to impose meaningful structure in high-dimensional models, improving generalization, transferability, and efficiency.

As AI continues to tackle increasingly complex and data-scarce environments, symmetry-aware learning architectures will play a pivotal role. Whether in modeling physical phenomena, guiding autonomous agents, or understanding natural language, the ability to harness and encode deep structural priors through equivariance is a game-changer.

The future is bright for equivariant deep learning, with ongoing research promising breakthroughs in how machines learn, reason, and adapt. Mastering this field will be crucial for those seeking to push the boundaries of AI capability and intelligence.

What are equivariant architectures in deep learning?

Equivariant architectures are neural networks whose outputs transform in a predictable way when their inputs are transformed. Because the symmetry is built into the architecture, the network does not have to learn it from data, which helps it generalize.

Why is equivariance important in weight space learning?

Equivariance enables models to navigate high-dimensional weight spaces more efficiently by reducing redundancy and preserving transformation structures, leading to faster training and improved generalization.

How do group-equivariant neural networks work?

Group-equivariant neural networks use mathematical groups (like rotations or permutations) to build symmetry-aware layers that respond consistently to input transformations.

What are some real-world applications of equivariant deep learning?

Equivariant architectures are used in physics simulations, robotics control, computer vision (like pose estimation), and any domain requiring structured data interpretation under transformation.

How is equivariant learning different from invariant learning?

In equivariant learning, the output changes in a predictable, corresponding way when the input is transformed. In invariant learning, the output stays the same regardless of how the input is transformed.
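The difference is easy to see on a small point cloud. This minimal sketch assumes 2D points and a planar rotation; the helper names `rotation`, `centroid`, and `radii` are illustrative. The centroid rotates along with the points (equivariant), while each point's distance from the origin does not change (invariant).

```python
import numpy as np

def rotation(theta):
    """2x2 rotation matrix for an angle theta in radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def centroid(points):
    """Mean position of the points: an equivariant feature."""
    return points.mean(axis=0)

def radii(points):
    """Distance of each point from the origin: an invariant feature."""
    return np.linalg.norm(points, axis=1)

rng = np.random.default_rng(0)
points = rng.normal(size=(5, 2))
R = rotation(0.7)
rotated = points @ R.T   # apply the rotation to every point

equivariant_ok = np.allclose(centroid(rotated), R @ centroid(points))
invariant_ok = np.allclose(radii(rotated), radii(points))
print("centroid is equivariant:", equivariant_ok)
print("radii are invariant:", invariant_ok)
```

Invariance is the special case of equivariance in which the transformation acts trivially on the output.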