Dense Deep Learning Architecture, Benefits, and Applications

Dense deep learning refers to neural networks built from dense (fully connected) layers, in which each neuron is connected to every neuron in the adjacent layers. This blog explores the architecture, benefits, applications, and challenges of dense deep learning in today’s AI-driven world.

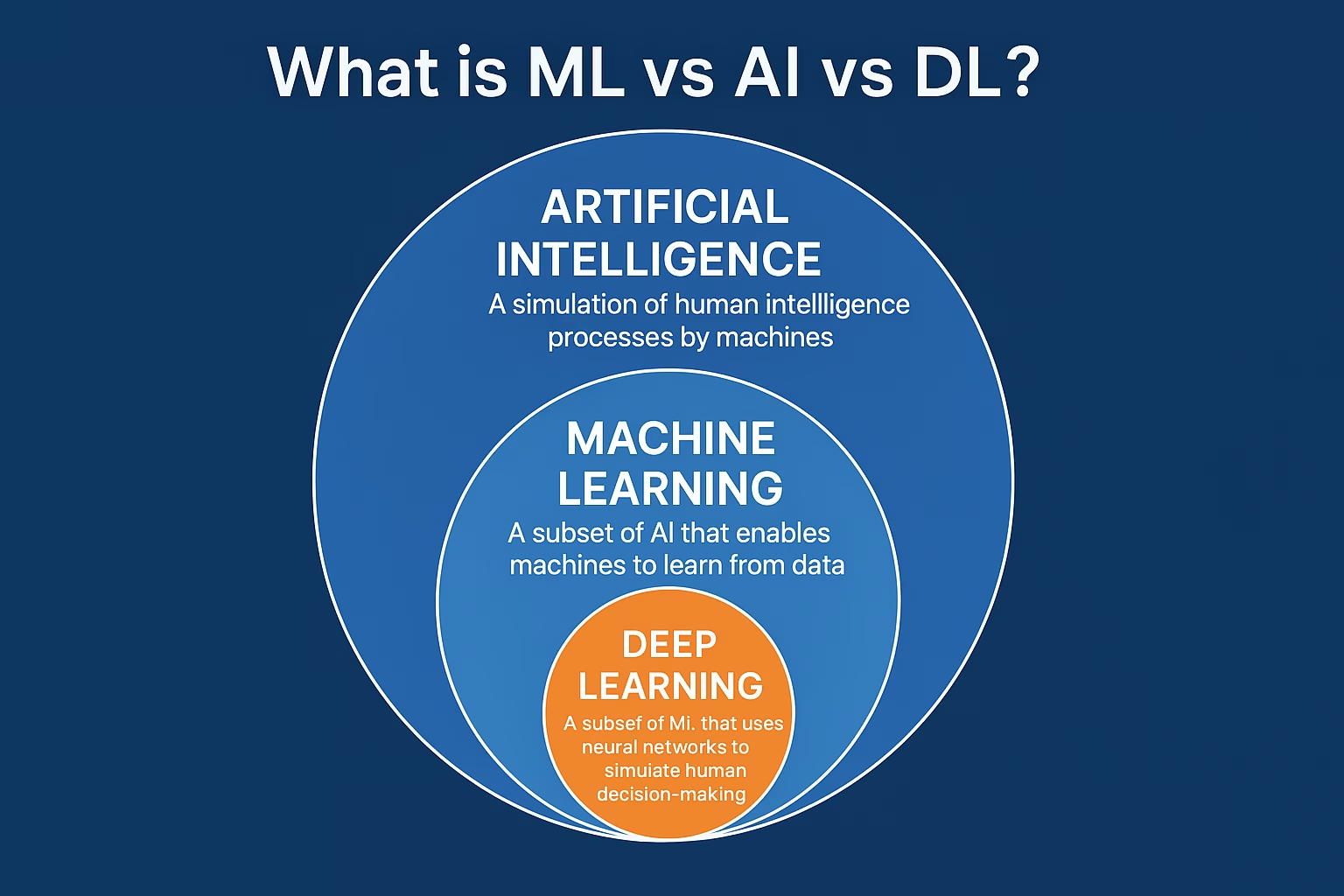

Deep learning has become the powerhouse behind modern AI applications. Among the foundational concepts in neural networks is the use of dense or fully connected layers. These layers are responsible for powerful transformations of data, enabling models to learn complex patterns in image recognition, language processing, and beyond.

In this blog, we delve into the mechanics and importance of dense deep learning. We’ll examine how dense layers operate, where they shine, their advantages, drawbacks, and their role in cutting-edge AI applications. Whether you’re a machine learning beginner or an experienced practitioner, understanding dense deep learning is crucial for building effective models.

Understanding Dense Layers in Deep Learning

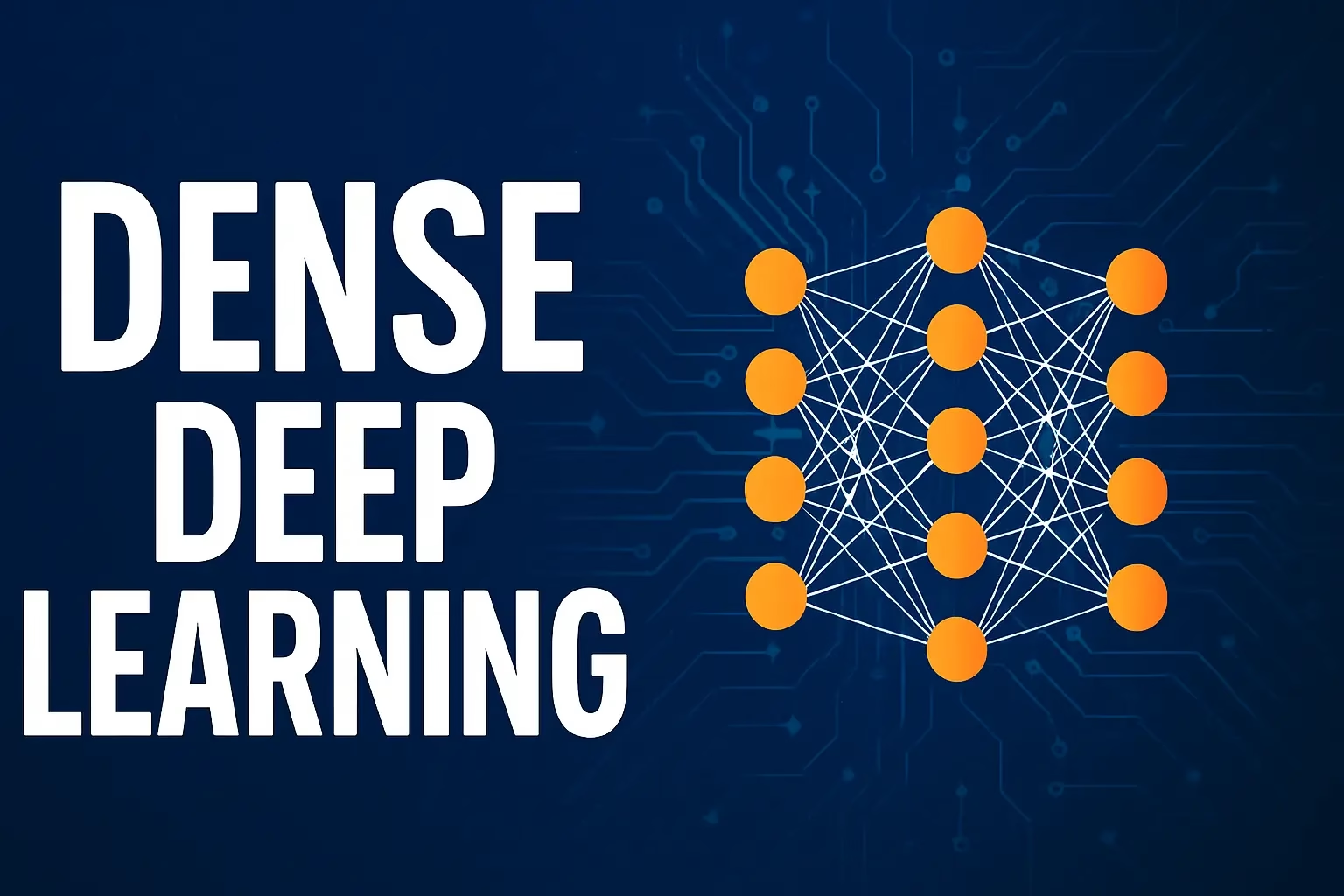

A dense layer, also known as a fully connected layer, is the basic building block of deep neural networks. In a dense layer, each neuron receives input from all neurons of the previous layer. This results in maximum interconnection, allowing for rich feature transformations.
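To make the full connectivity concrete, here is a minimal sketch of a dense layer's forward pass in plain Python. The function name `dense_forward`, the ReLU activation, and the example weights are illustrative assumptions, not taken from any particular library.

```python
def relu(z):
    # Rectified linear unit: passes positive values, clips negatives to zero.
    return max(0.0, z)

def dense_forward(inputs, weights, biases, activation=None):
    """Compute output[j] = activation(sum_i weights[j][i] * inputs[i] + biases[j])."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Every output neuron reads every input value (full connectivity).
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs

# Hypothetical layer mapping 3 inputs to 2 neurons.
x = [1.0, 2.0, 3.0]
W = [[0.5, 1.0, -0.5],   # weights of neuron 0
     [-1.0, 0.0, 0.0]]   # weights of neuron 1
b = [0.5, 0.0]

y = dense_forward(x, W, b, activation=relu)
print(y)  # [1.5, 0.0]
```

In a real framework the same computation is done as a single matrix multiplication, but the loop form shows that each neuron holds one weight per input.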

Dense layers play a vital role in combining features and performing complex mappings from inputs to outputs. For instance, in image classification, earlier layers may detect edges and textures, while dense layers synthesize these into abstract representations like object types. This hierarchical feature representation is what makes deep learning so powerful.

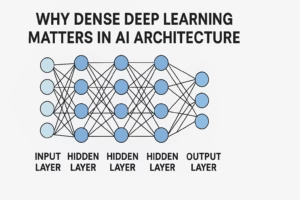

Why Dense Deep Learning Matters in AI Architecture

Dense deep learning contributes significantly to the success of various AI architectures. These layers are often found at the end of convolutional neural networks (CNNs) where they serve as the final decision-making mechanism, aggregating extracted features into class scores or regression outputs.
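As a rough illustration of this decision-making role, the sketch below turns a flattened feature vector into class probabilities using one dense layer followed by softmax. The feature values, weights, and the name `classifier_head` are made up for the example; softmax is assumed here as a common (not the only) way to convert class scores into probabilities.

```python
import math

def softmax(scores):
    # Subtract the maximum score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classifier_head(features, weights, biases):
    # One dense layer produces a raw score (logit) per class.
    logits = [sum(w * f for w, f in zip(row, features)) + bias
              for row, bias in zip(weights, biases)]
    return softmax(logits)

# Hypothetical flattened features from earlier convolutional layers.
features = [0.2, 0.8, 0.5, 0.1]
# Hypothetical head with 3 classes; each row holds one class's weights.
W = [[1.0, 0.0, 0.0, 0.0],
     [0.0, 1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0, 0.0]]
b = [0.0, 0.0, 0.0]

probs = classifier_head(features, W, b)
predicted_class = max(range(len(probs)), key=lambda i: probs[i])
print(predicted_class)
```

For regression outputs, the softmax step would simply be dropped and the raw dense outputs used directly.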

The strength of dense layers lies in their versatility. They can be applied to numerous domains including vision, speech, and language. Moreover, they are essential in architectures like feedforward networks, multilayer perceptrons (MLPs), and hybrid models that mix convolutional, recurrent, and attention-based mechanisms.

Key Characteristics of Dense Layers

- High parameter count due to full connectivity

- Powerful for learning global patterns

- Often used after feature extraction layers

Applications of Dense Deep Learning in Real-World Use Cases

Dense deep learning models are deployed in a wide range of real-world applications. In healthcare, they are used for disease prediction based on electronic health records or medical imaging. The dense layers help synthesize various input signals into actionable outcomes.

In financial services, dense layers analyze complex datasets for credit scoring, fraud detection, and algorithmic trading. By capturing non-linear relationships, they can outperform traditional statistical models when enough training data is available. E-commerce platforms utilize them in recommendation engines by learning latent patterns from user behavior and item attributes.

Natural language processing (NLP) is another major area. Transformer models like BERT and GPT contain dense layers in the position-wise feed-forward block of every transformer layer and in their output heads, supporting tasks such as sentiment analysis, machine translation, and question answering. They help the model represent the semantics of language at various levels.

Benefits of Using Dense Layers in Deep Learning Models

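One of the biggest benefits of dense layers is their capacity to learn complex, non-linear relationships. By the universal approximation theorem, a network with at least one hidden dense layer, a non-linear activation, and enough neurons can approximate any continuous function on a closed, bounded input domain to arbitrary accuracy. The theorem does not guarantee that training will find such weights, but with sufficient training data this capacity makes dense layers extremely powerful for predictive modeling.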
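A small example of a non-linear relationship is XOR, which no single linear layer can represent. The sketch below shows two dense layers with a ReLU in between computing it exactly. The weights are set by hand for illustration rather than learned, and the helper names are assumptions.

```python
def relu(z):
    return max(0.0, z)

def dense(inputs, weights, biases, activation=None):
    # One fully connected layer: weighted sum per neuron, then optional activation.
    outputs = []
    for row, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs

# Hand-picked weights (not trained): h1 = relu(x1 + x2), h2 = relu(x1 + x2 - 1).
W_hidden = [[1.0, 1.0], [1.0, 1.0]]
b_hidden = [0.0, -1.0]
# Output = h1 - 2 * h2, which cancels the (1, 1) case back to zero.
W_out = [[1.0, -2.0]]
b_out = [0.0]

def xor_net(x1, x2):
    hidden = dense([x1, x2], W_hidden, b_hidden, activation=relu)
    return dense(hidden, W_out, b_out)[0]

outputs = [xor_net(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(outputs)  # [0.0, 1.0, 1.0, 0.0]
```

Removing the ReLU collapses the two layers into a single linear map, which is why the non-linear activation is essential to the approximation capacity.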

Dense layers are also easier to implement and train compared to more complex structures like convolutional or recurrent layers. Most deep learning libraries such as TensorFlow, Keras, and PyTorch offer straightforward APIs for adding dense layers, accelerating development time.

Another advantage is transferability. Dense layers at the end of pre-trained models can be fine-tuned to adapt to new tasks or datasets, making them crucial for transfer learning. This technique allows developers to leverage powerful pre-trained backbones and build custom applications efficiently.
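The idea of fine-tuning only the final dense layer can be sketched in plain Python. Here `frozen_backbone` is a hypothetical stand-in for a pre-trained feature extractor, the four data points are invented, and the head is a single sigmoid neuron trained with full-batch gradient descent. A real project would use a framework's pre-trained model and optimizer instead.

```python
import math

def frozen_backbone(x):
    # Stand-in for a pre-trained feature extractor; it is never updated below.
    return [x, x * x]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def head_predict(features, w, b):
    # The trainable dense head: one neuron with a sigmoid activation.
    return sigmoid(sum(wi * fi for wi, fi in zip(w, features)) + b)

def mean_loss(data, w, b):
    # Binary cross-entropy averaged over the dataset.
    total = 0.0
    for x, y in data:
        p = head_predict(frozen_backbone(x), w, b)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)

def fine_tune_head(data, w, b, lr=0.1, steps=100):
    # Full-batch gradient descent on the head's parameters only.
    for _ in range(steps):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in data:
            f = frozen_backbone(x)
            err = head_predict(f, w, b) - y  # gradient of the loss w.r.t. the logit
            grad_w = [g + err * fi for g, fi in zip(grad_w, f)]
            grad_b += err
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    return w, b

# Toy task (made up): label is 1 when x is positive.
data = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]
w0, b0 = [0.0, 0.0], 0.0
loss_before = mean_loss(data, w0, b0)
w1, b1 = fine_tune_head(data, w0, b0)
loss_after = mean_loss(data, w1, b1)
predictions = [round(head_predict(frozen_backbone(x), w1, b1)) for x, _ in data]
print(loss_before > loss_after, predictions)
```

Because only the head's few parameters are updated, training is cheap, which is the main appeal of this style of transfer learning.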

Dense Layer Benefits Summary

- Universal function approximation, given non-linear activations and enough neurons

- Simple and flexible architecture

- Essential for final decision layers in models

Challenges and Limitations of Dense Deep Learning

Despite their power, dense layers come with certain drawbacks. The primary issue is scalability. Because each neuron is connected to all neurons in the previous layer, a layer with n inputs and m neurons has n × m weights plus m biases, so the parameter count grows with the product of the layer widths. This leads to increased memory usage and training time.
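The growth in parameters is easy to check with a little arithmetic. The sketch below counts weights and biases for a dense layer and for a stack of them. The layer sizes are illustrative choices: a 224 × 224 RGB image flattened into one 1000-unit layer, and a small 784-512-256-10 multilayer perceptron.

```python
def dense_param_count(n_inputs, n_outputs):
    # One weight per (input, output) pair plus one bias per output neuron.
    return n_inputs * n_outputs + n_outputs

def mlp_param_count(layer_sizes):
    # Sum the parameters of each consecutive pair of layers.
    return sum(dense_param_count(n_in, n_out)
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# Flattened 224 x 224 RGB image fed directly into 1000 neurons.
image_inputs = 224 * 224 * 3
single_layer = dense_param_count(image_inputs, 1000)
print(single_layer)  # 150529000

# A small multilayer perceptron for 28 x 28 grayscale inputs.
small_mlp = mlp_param_count([784, 512, 256, 10])
print(small_mlp)  # 535818
```

A single dense layer on raw image pixels already needs about 150 million parameters, which is why dense layers are usually placed after layers that shrink the representation.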

Dense deep learning models are also prone to overfitting, especially when dealing with small datasets. Without proper regularization techniques like dropout, weight decay, or early stopping, these models may perform well on training data but fail to generalize.
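Of the regularization techniques mentioned, dropout is simple to sketch. The version below is "inverted" dropout, a common formulation in which kept activations are scaled up during training so that nothing needs to change at inference time. The function signature and the explicit `rng` argument are assumptions made for this example.

```python
import random

def dropout(activations, rate, training, rng):
    """Inverted dropout: zero each activation with probability `rate` while training."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        # At inference time the layer passes values through unchanged.
        return list(activations)
    keep_prob = 1.0 - rate
    # Kept values are scaled by 1 / keep_prob so the expected activation is unchanged.
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]

rng = random.Random(0)  # seeded only to make the example repeatable
hidden = [0.5, 1.0, 1.5, 2.0]

train_out = dropout(hidden, rate=0.5, training=True, rng=rng)
eval_out = dropout(hidden, rate=0.5, training=False, rng=rng)
print(train_out, eval_out)
```

Randomly silencing neurons discourages the network from relying on any single connection, which tends to help when the dataset is small.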

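Moreover, dense layers are not always ideal for data with spatial or sequential structure, such as images or time series. A dense layer shares no weights across input positions, so it has to learn the same pattern separately wherever it occurs. Specialized layers such as convolutions (for images) or recurrent units (for sequences) often outperform dense layers in these contexts because they build locality or temporal order into the architecture.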

Common Solutions to Dense Layer Limitations

- Use of dropout and batch normalization

- Model pruning and compression

- Layer hybridization with convolutional or attention layers

Future Trends in Dense Deep Learning

Dense deep learning is continuously evolving. One notable trend is how closely dense layers are integrated with transformer-based models. While transformers rely heavily on self-attention, each transformer block also contains a position-wise feed-forward network made of dense layers, and dense layers still play a crucial role in output generation and classification.

Model efficiency is another frontier. Researchers are working on quantized and sparse dense layers that maintain performance while reducing memory and compute requirements. This is especially important for deploying models on edge devices and mobile platforms.
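As one illustration of quantization, the sketch below maps a dense layer's floating-point weights to 8-bit integers with a single shared scale (symmetric per-tensor quantization). This is only one of several possible schemes, and the function names and weight values are assumptions for the example.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Fall back to a scale of 1.0 for an all-zero tensor to avoid division by zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    # Recover approximate float weights from the stored integers.
    return [q * scale for q in quantized]

# Hypothetical weights from one dense layer.
weights = [0.42, -1.27, 0.05, 0.9]
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

# Rounding to the nearest integer bounds the error by about half a step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q_weights, scale, max_error)
```

Storing one byte per weight instead of four cuts memory roughly fourfold, at the cost of the small rounding error measured above.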

AutoML tools are also optimizing the structure of dense layers. These tools use algorithms to automatically design neural networks, including the number and size of dense layers, reducing the need for manual tuning and boosting productivity for machine learning practitioners.

Future Enhancements and Innovations

- Integration with attention mechanisms

- Advances in low-rank approximations

- Smarter architectural search using AutoML
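To give a feel for low-rank approximation, the sketch below replaces a dense weight matrix W of shape m × n with two thinner factors U (m × r) and V (r × n) and compares parameter counts. The helper names, the layer size, and the rank are illustrative assumptions, and biases are left out for simplicity.

```python
def matvec(matrix, vector):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def low_rank_forward(U, V, x):
    # Computes U (V x) without ever building the full m x n matrix.
    return matvec(U, matvec(V, x))

def full_params(n_in, n_out):
    return n_in * n_out

def low_rank_params(n_in, n_out, rank):
    # V holds rank * n_in values and U holds n_out * rank values.
    return rank * (n_in + n_out)

# Tiny rank-1 example: U is 2 x 1 and V is 1 x 3.
U = [[1.0], [2.0]]
V = [[1.0, 0.0, -1.0]]
x = [3.0, 5.0, 1.0]
y = low_rank_forward(U, V, x)
print(y)  # [2.0, 4.0]

# Parameter savings for a hypothetical 1024 x 1024 layer at rank 64.
print(full_params(1024, 1024))          # 1048576
print(low_rank_params(1024, 1024, 64))  # 131072
```

How much accuracy survives depends on how close the trained weight matrix really is to low rank, so the rank is typically tuned per layer.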

Conclusion: Harnessing Dense Deep Learning for Intelligent Systems

Dense deep learning continues to be a cornerstone of neural network design. Its ability to learn complex relationships, adaptability to various domains, and integration into larger architectures make it invaluable for developers and researchers alike.

While challenges such as overfitting and scalability exist, modern solutions like dropout, pruning, and hybrid architectures are addressing these limitations. As AI progresses, dense layers will remain essential, evolving with new technologies and use cases.

By understanding and leveraging dense deep learning, practitioners can build more accurate, robust, and efficient AI models that solve real-world problems across industries. Whether in finance, healthcare, e-commerce, or NLP, the power of fully connected layers continues to shape the future of machine learning.