Deep Learning Memory Optimization: Maximizing AI Performance

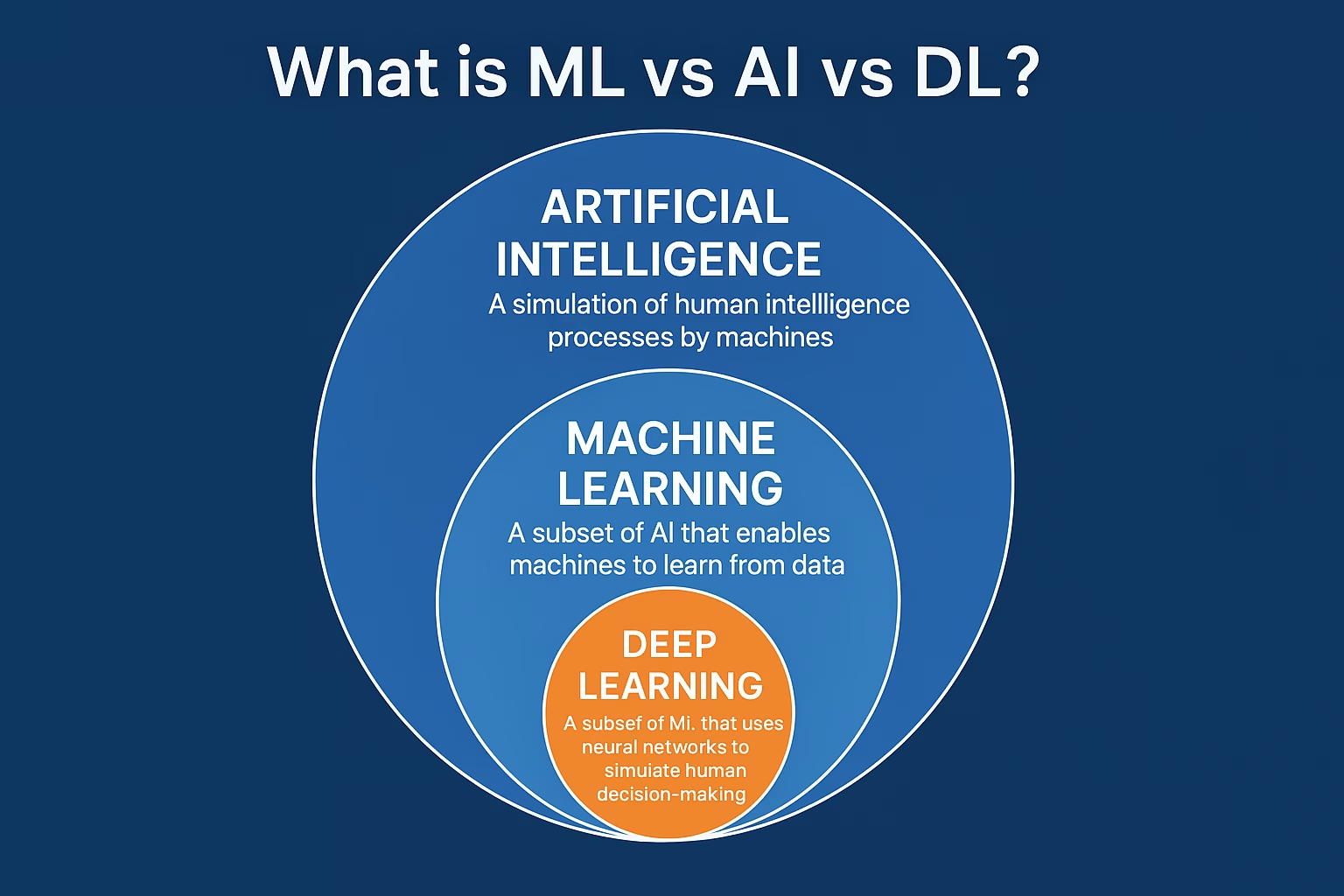

Introduction: Deep learning memory optimization is crucial for enhancing model performance, minimizing computational costs, and enabling deployment on resource-constrained environments. With the ever-increasing size of neural networks, efficient memory management ensures scalability and speed in AI development. This article explores key strategies, tools, and innovations in deep learning memory optimization that help developers push the limits of artificial intelligence.

As deep learning models grow more complex, managing memory becomes a bottleneck that can hinder productivity and performance. From GPUs to TPUs, understanding and applying memory optimization techniques ensures that your deep learning projects remain fast, efficient, and cost-effective. Below, we dive into the best practices, tools, and theoretical foundations of deep learning memory optimization.

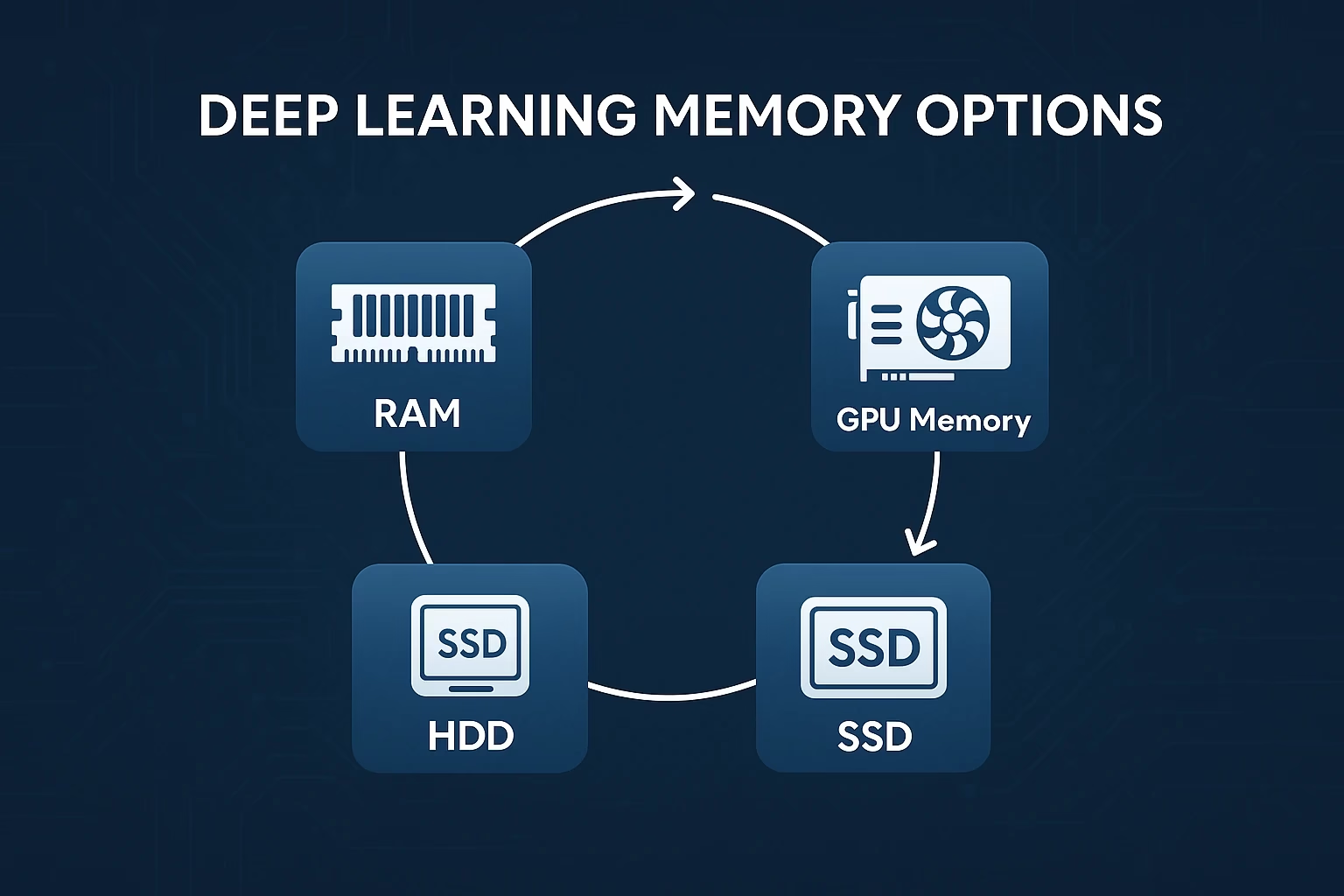

Understanding Deep Learning Memory Architecture

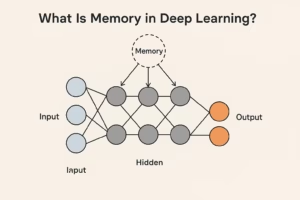

What Is Deep learning memory ?

Memory in deep learning refers to the storage used to hold model parameters, gradients, activations, and temporary data during training or inference. Most models rely on GPUs with limited VRAM, making it essential to use memory wisely. The size of tensors, batch sizes, and model layers significantly affect memory consumption.

Why Optimization Matters

Optimizing memory usage is not just about faster processing—it enables training larger models, reduces cloud costs, and allows deployment on edge devices like smartphones or IoT systems. Efficient memory use ensures that your hardware is being utilized to its full potential without crashing due to out-of-memory (OOM) errors.

Key Techniques for Deep Learning Memory Optimization

Gradient Checkpointing

Gradient checkpointing trades compute for memory by saving only a subset of intermediate activations and recomputing them during the backward pass. This can reduce memory consumption by up to 50%, especially in deep transformer-based architectures like BERT or GPT.

Mixed Precision Training

Mixed precision training uses a combination of 16-bit (FP16) and 32-bit (FP32) floating-point types. By storing weights and activations in FP16 and performing critical operations in FP32, this technique can drastically reduce memory usage while maintaining model accuracy. NVIDIA’s Apex and PyTorch AMP make this easier to implement.

Framework-Specific Memory Optimization Tools

TensorFlow Memory Management

TensorFlow provides memory growth settings to prevent it from pre-allocating all GPU memory. Using `tf.config.experimental.set_memory_growth` allows your model to only use as much GPU memory as it needs. TensorFlow also includes XLA (Accelerated Linear Algebra), which compiles subgraphs to optimize memory and speed.

PyTorch Memory Tools

PyTorch offers `torch.cuda.empty_cache()` to free unused memory, as well as built-in memory profiler tools such as `torch.utils.bottleneck` and `torch.profiler`. These tools give insights into GPU usage, helping developers find and fix memory leaks or inefficiencies.

JAX and XLA Compilation

JAX, developed by Google, is known for its functional programming style and performance. It uses XLA compilation to fuse operations and minimize memory use. JAX is particularly good at optimizing model training loops at a low level.

Hardware-Aware Memory Optimization Strategies

Using Efficient Batch Sizes

Batch size affects memory consumption dramatically. A larger batch size uses more memory but can improve gradient estimates. Memory optimization often involves finding the sweet spot where performance and memory use are balanced. Adaptive batch sizing is a technique that changes the batch size during training to prevent OOM errors.

Tensor Slicing and Chunking

Tensor slicing involves splitting large tensors into smaller chunks that are processed sequentially. This is especially useful when handling high-resolution images or 3D data in medical imaging, where tensor sizes can be massive.

Offloading and Multi-GPU Techniques

Some frameworks allow offloading certain computations to the CPU to reduce GPU memory usage. Multi-GPU training (using techniques like model parallelism or data parallelism) distributes memory load across devices, enabling the training of massive models without hitting memory limits.

Memory Optimization in Transformer Models

Attention Mechanism Efficiency

Transformers like BERT and GPT are memory-intensive due to the self-attention mechanism. Techniques like sparse attention, Linformer, and Performer approximate attention scores to reduce memory complexity from quadratic to linear or sublinear.

Layer Freezing and Pruning

During fine-tuning, freezing early layers of a transformer reduces memory and compute requirements. Pruning removes less important weights or neurons, shrinking the model’s memory footprint while maintaining performance.

Quantization of Models

Quantization reduces memory by representing weights and activations with fewer bits (e.g., 8-bit integers instead of 32-bit floats). This is especially useful for deploying models on mobile or embedded devices where memory is at a premium.

Best Practices for Long-Term Memory Optimization

Profiling Your Model Early

Use memory profilers early in the model development cycle to catch inefficient layers or data structures. Tools like NVIDIA Nsight Systems, PyTorch Profiler, and TensorFlow Profiler provide detailed insights into memory usage per operation.

Data Pipeline Optimization

Efficient input pipelines reduce memory spikes. Prefetching, caching, and data compression (like using JPEGs instead of raw images) reduce the memory load on both the GPU and the CPU. Consider using efficient data formats like TFRecords or LMDBs.

Model Architecture Design

Choosing an efficient model architecture from the start can save significant memory. Lightweight architectures like MobileNet, EfficientNet, or DistilBERT are designed for high performance with low memory usage, ideal for mobile or real-time applications.

Frequently Asked Questions (FAQs)

1. What causes out-of-memory (OOM) errors in deep learning?

OOM errors occur when the model, data, or batch size exceeds the available GPU or CPU memory. It’s often caused by unoptimized architectures, large batch sizes, or inefficient memory allocation during training.

2. How do I know how much memory my model uses?

You can use profiling tools like PyTorch Profiler, TensorBoard, or NVIDIA Nsight to monitor and analyze memory usage during training and inference.

3. Can memory optimization reduce training time?

Yes, optimizing memory usage can indirectly reduce training time by preventing crashes, enabling larger batch sizes, and improving hardware utilization.

4. Is mixed precision training safe for all models?

Most modern models work well with mixed precision training, but edge cases may suffer from instability or loss of accuracy. Always monitor training metrics to ensure model performance isn’t compromised.

5. Should I always use the largest batch size that fits in memory?

Not necessarily. While larger batches can speed up training, they may lead to poorer generalization. It’s important to tune batch size for both performance and accuracy, not just memory usage.