AI Safety Ethics: Principles, Challenges, and Best Practices

AI safety ethics is a rapidly evolving discipline that focuses on developing and deploying artificial intelligence in a manner that is ethical, transparent, and beneficial to society. As AI systems become more autonomous and powerful, ensuring their safety and aligning their behavior with human values is essential for long-term sustainability and trust.

With AI influencing areas such as healthcare, transportation, law enforcement, and finance, the importance of ethical safeguards has never been greater. Policymakers, developers, and ethicists are working together to build frameworks that reduce risks while promoting innovation. This comprehensive guide explores the foundational concepts, ongoing challenges, and actionable practices in the realm of AI safety ethics.

Understanding AI Safety Ethics: Core Concepts and Relevance

AI safety ethics combines technical disciplines with moral philosophy to address how intelligent systems should behave and how humans should control them. It encompasses questions like: Should an AI prioritize efficiency over fairness? Who is responsible if an AI causes harm? And how do we ensure AI doesn’t act against human interests, especially when it becomes more autonomous?

The relevance of AI safety ethics lies in its potential to prevent unintended consequences. From biased decision-making in judicial systems to lethal autonomous weapons, the stakes are high. Ethical AI design is not just about avoiding malfunctions but about actively guiding AI development toward outcomes that align with democratic and humanitarian values.

Ethical Challenges in AI Development and Deployment

One of the most pressing issues in AI safety ethics is algorithmic bias. Machine learning models often replicate societal biases present in their training data, leading to discriminatory outcomes in hiring, lending, or policing. Ethical AI design must focus on auditing and correcting these imbalances to avoid perpetuating inequality.
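
As a rough illustration of what such an audit can look like, the sketch below compares selection rates across groups and computes a disparate impact ratio. The data, the group labels, and the function names are hypothetical, and a ratio is only one of several fairness measures an audit might use.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the fraction of positive outcomes per group.

    `records` is an iterable of (group, outcome) pairs, with outcome 0 or 1.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive outcome, so nothing to compare
    return min(rates.values()) / highest

# Hypothetical hiring decisions: (applicant group, 1 = advanced to interview)
decisions = [("A", 1), ("A", 1), ("A", 0), ("A", 1),
             ("B", 1), ("B", 0), ("B", 0), ("B", 0)]

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(round(ratio, 3))
```

A common rule of thumb flags ratios below 0.8 for closer review. A low ratio does not prove discrimination by itself, but it tells auditors where to look first.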

Transparency and explainability are also crucial. As black-box models become more complex, it becomes harder to understand how and why AI systems reach their decisions. This lack of interpretability undermines trust and makes it difficult to hold anyone accountable when a decision causes harm. Ethical standards must push for explainable AI (XAI) systems, especially in high-stakes applications.

Autonomy and Control

As AI becomes more capable of making decisions without human intervention, questions about control and responsibility arise. Keeping humans “in the loop” is essential for ethical governance. Many researchers regard the loss of meaningful control over highly capable AI systems as a serious, potentially existential risk, one that must be mitigated through careful oversight.
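
One simple way to keep a human in the loop is to let the system act on its own only when it is confident, and to defer everything else to a reviewer. The sketch below shows that pattern. The 0.9 threshold, the `Decision` type, and the reviewer callback are illustrative assumptions, not a prescribed design.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Decision:
    label: str
    confidence: float
    decided_by: str  # "model" or "human"

def decide(label: str, confidence: float,
           ask_human: Callable[[str, float], str],
           threshold: float = 0.9) -> Decision:
    """Accept the model's label only when its confidence clears the threshold;
    otherwise hand the case to a human reviewer."""
    if confidence >= threshold:
        return Decision(label, confidence, "model")
    human_label = ask_human(label, confidence)
    return Decision(human_label, confidence, "human")

# Hypothetical reviewer: in a real system this would open a review task.
def cautious_reviewer(suggested_label: str, confidence: float) -> str:
    return "needs_manual_check"

auto = decide("approve", 0.97, cautious_reviewer)
deferred = decide("approve", 0.62, cautious_reviewer)
print(auto.decided_by, deferred.decided_by)
```

Recording who made each decision, as the `decided_by` field does here, also makes later questions of responsibility easier to answer.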

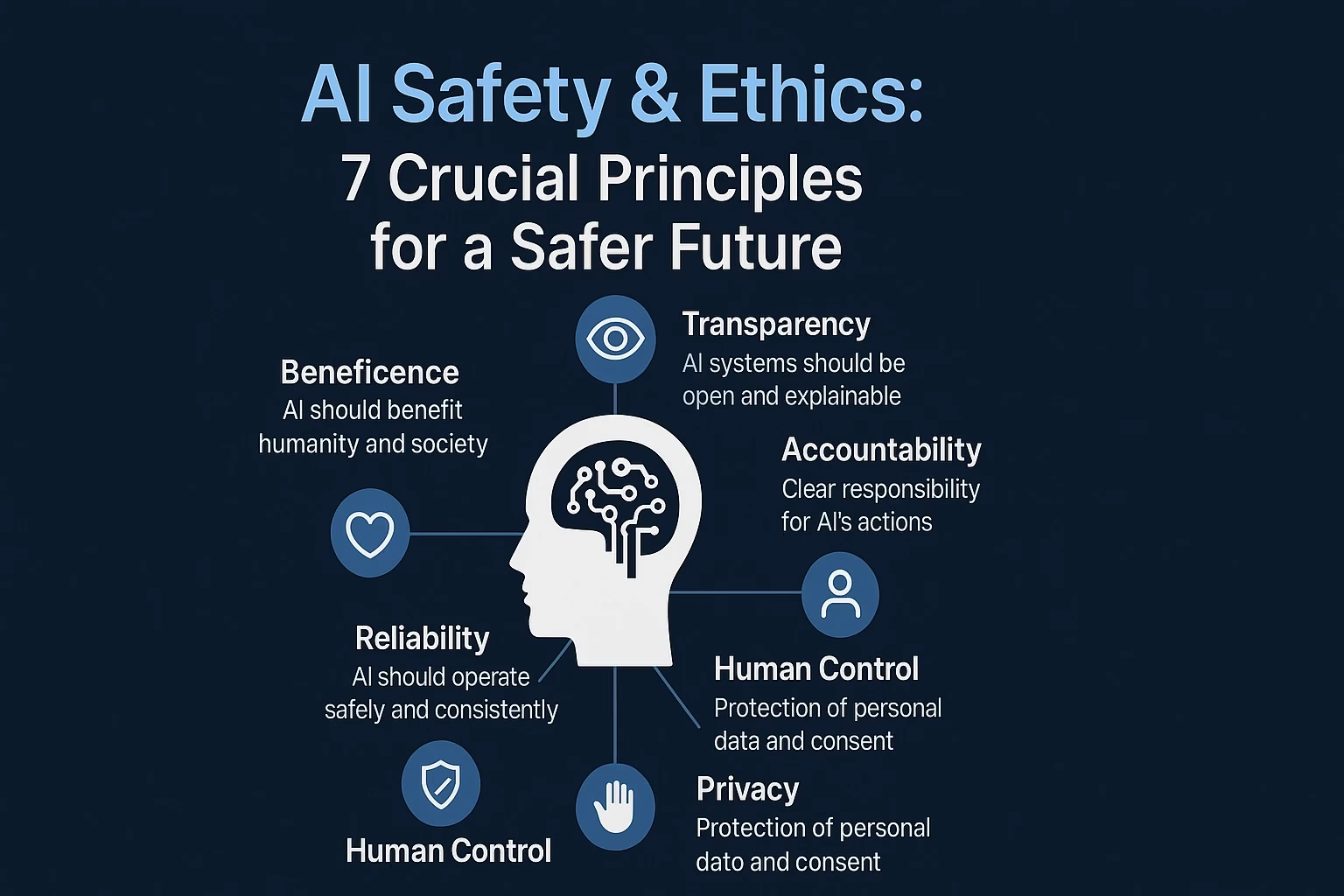

Frameworks and Principles Guiding AI Ethics

Several international organizations, such as the OECD, UNESCO, and the EU, have published ethical principles for AI. Common themes include fairness, accountability, transparency, human-centered values, and sustainability. These frameworks serve as foundational guides for AI governance and regulation.

For instance, the European Union’s AI Act classifies AI systems based on their risk levels and mandates strict oversight for high-risk applications like facial recognition or medical diagnostics. This risk-based approach aims to balance innovation with fundamental rights protections.
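
A risk-based approach can be pictured as a lookup from use case to tier, with each tier carrying its own obligations. The sketch below uses the four tiers commonly described for the AI Act. The use-case names and their assignments are simplified illustrations, not legal classifications, and the function name is hypothetical.

```python
from enum import Enum

class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "strict oversight required"
    LIMITED = "transparency obligations"
    MINIMAL = "no additional obligations"

# Illustrative assignments only; a real classification needs legal review.
EXAMPLE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "medical_diagnostics": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}

def classify(use_case: str) -> RiskTier:
    """Look up the tier for a known use case; refuse to guess for unknown ones."""
    if use_case not in EXAMPLE_TIERS:
        raise KeyError(f"{use_case!r} has not been assessed yet")
    return EXAMPLE_TIERS[use_case]

tier = classify("medical_diagnostics")
print(tier.name, "->", tier.value)
```

Raising an error for unassessed use cases is a deliberate choice in this sketch: defaulting an unknown system to the lowest tier would defeat the purpose of the classification.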

Ethics by Design

Integrating ethics into the design phase of AI systems ensures that safety isn’t an afterthought. Developers should incorporate ethical considerations into data selection, model architecture, and decision logic. This proactive approach is known as “ethics by design” and is essential for creating responsible AI technologies.

Mitigating Risks and Ensuring AI Safety

Risk mitigation in AI involves both technical safeguards and policy interventions. Technical strategies include robustness testing, adversarial training, and anomaly detection to ensure the AI behaves as intended in diverse conditions. These tools are vital for preventing accidents and adversarial exploitation.
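
To make anomaly detection concrete, the sketch below flags a model output that falls far outside a baseline established during normal operation, using a plain z-score. The baseline values and the threshold of 3 standard deviations are assumptions chosen for illustration. Production systems typically use more robust detectors.

```python
import statistics

def fit_baseline(values):
    """Summarize normal behavior as a mean and sample standard deviation."""
    return statistics.mean(values), statistics.stdev(values)

def is_anomalous(x, mean, stdev, z_threshold=3.0):
    """Flag x when it lies more than z_threshold standard deviations from the mean."""
    if stdev == 0:
        return x != mean  # constant baseline: any deviation is unusual
    return abs(x - mean) / stdev > z_threshold

# Hypothetical confidence scores observed while the system behaved as intended
baseline = [0.48, 0.52, 0.50, 0.49, 0.51, 0.50, 0.47, 0.53]
mean, stdev = fit_baseline(baseline)

typical = is_anomalous(0.51, mean, stdev)
unusual = is_anomalous(0.95, mean, stdev)
print(typical, unusual)
```

A flagged value is a prompt for investigation rather than proof of a fault. It may indicate a data shift, a malfunction, or an adversarial input.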

On the policy side, institutions must enforce clear accountability frameworks. When harm occurs, tracing the decision path and identifying liable entities becomes essential. Regulatory bodies can enforce standards and penalties to encourage safer practices across industries using AI.

Human Oversight and Auditability

Embedding human oversight into AI systems ensures a balance between automation and accountability. Regular audits, both internal and external, help verify compliance with ethical standards. Transparency reports and algorithmic impact assessments can provide further insights into AI behavior and safety risks.
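
Audits depend on records that can be trusted. One way to support this is a tamper-evident decision log in which each entry includes a hash of the previous one, so later edits become detectable. The sketch below illustrates the idea with a hypothetical record format. It is a minimal example, not a complete audit system.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash for the first entry

def _entry_hash(prev_hash, record):
    """Hash the previous entry's hash together with this record's contents."""
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

def append_entry(log, record):
    """Append a decision record, chaining it to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({"record": record,
                "prev_hash": prev_hash,
                "hash": _entry_hash(prev_hash, record)})

def verify(log):
    """Return True only if no entry was altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True

# Hypothetical decision records
audit_log = []
append_entry(audit_log, {"case": 101, "decision": "deny", "by": "model"})
append_entry(audit_log, {"case": 102, "decision": "approve", "by": "human"})
print(verify(audit_log))
```

If someone later changes a stored record, for example flipping a "deny" to "approve", `verify` returns False because the stored hash no longer matches the record's contents.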

Future of AI Safety Ethics: Global Collaboration and Innovation

The future of AI safety ethics depends on global cooperation. AI does not recognize national borders, and neither do its risks. International treaties, cross-border research, and multi-stakeholder forums are necessary to align ethical norms across cultures and governments.

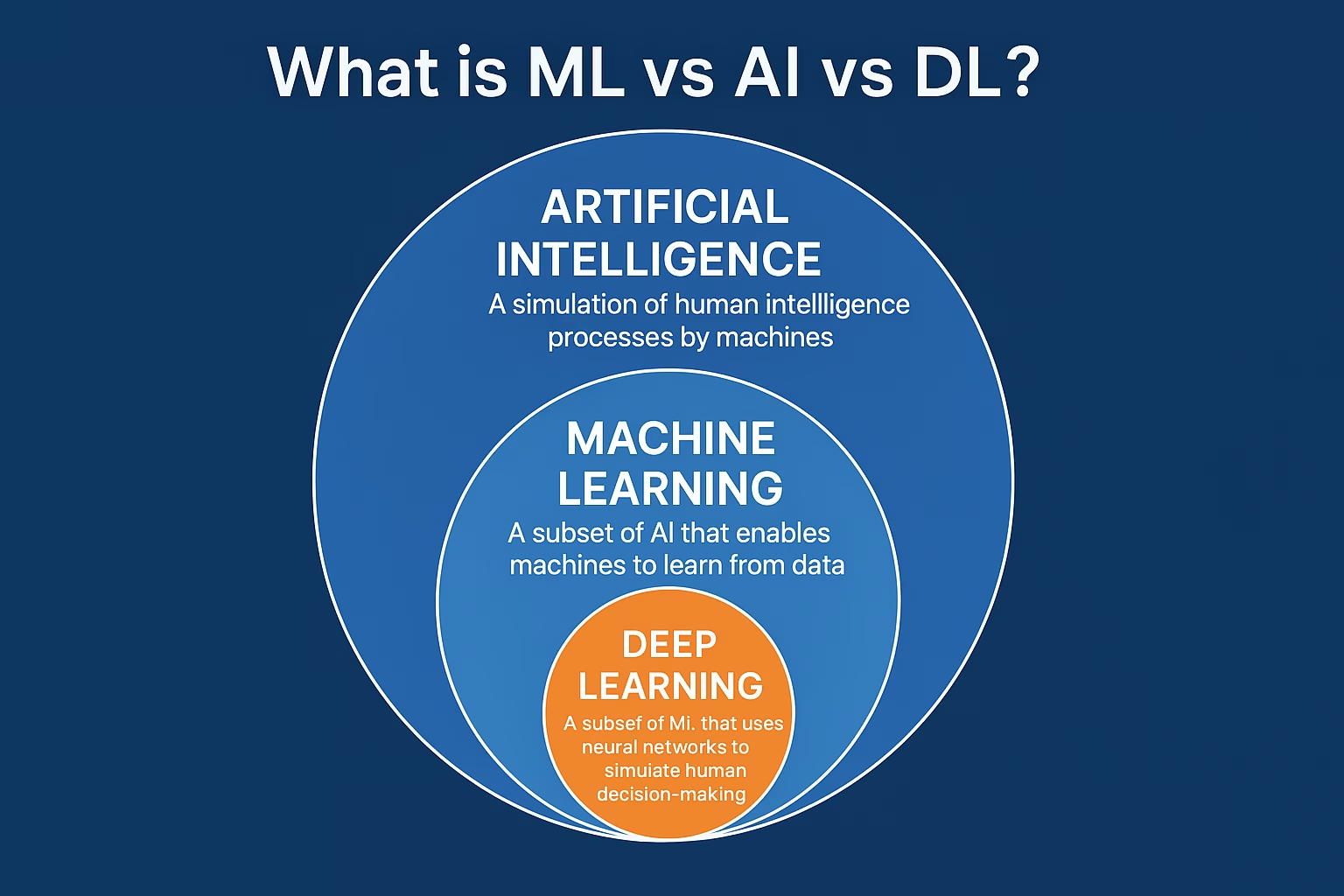

Innovations like neurosymbolic AI, causal inference models, and quantum machine learning introduce new layers of complexity and new ethical considerations. Continuous research into alignment, interpretability, and safe AGI development will shape the next decade of AI safety.

Education and Public Awareness

Educating developers, policymakers, and the public about AI safety ethics is essential for responsible adoption. As awareness grows, so does the pressure on companies and governments to implement ethical safeguards. A well-informed society is a powerful tool in shaping the future of AI development.

FAQs About AI Safety Ethics

What is AI safety ethics?

AI safety ethics is the study and practice of ensuring that artificial intelligence systems operate safely, ethically, and in alignment with human values. It involves developing frameworks to prevent harm and promote fairness, transparency, and accountability.

Why is AI ethics important?

AI ethics is important because AI systems can significantly impact human lives. Ethical oversight ensures that these technologies do not perpetuate bias, infringe on rights, or cause unintended harm, especially as AI becomes more autonomous and pervasive.

How can developers ensure ethical AI?

Developers can promote ethical AI by adopting best practices such as fairness audits, explainable AI models, and inclusive data collection, and by following established ethical guidelines such as those published by the EU, the OECD, or the IEEE.

What are some common ethical issues in AI?

Common ethical issues in AI include algorithmic bias, lack of transparency, data privacy violations, misuse in surveillance, and loss of accountability. Addressing these challenges is essential for trust and safety in AI applications.

How is AI safety regulated?

AI safety is regulated through national and regional laws (e.g., the EU AI Act), industry standards, and global frameworks. These regulations aim to classify AI systems by risk, enforce transparency, and hold entities accountable for unethical use or failures.