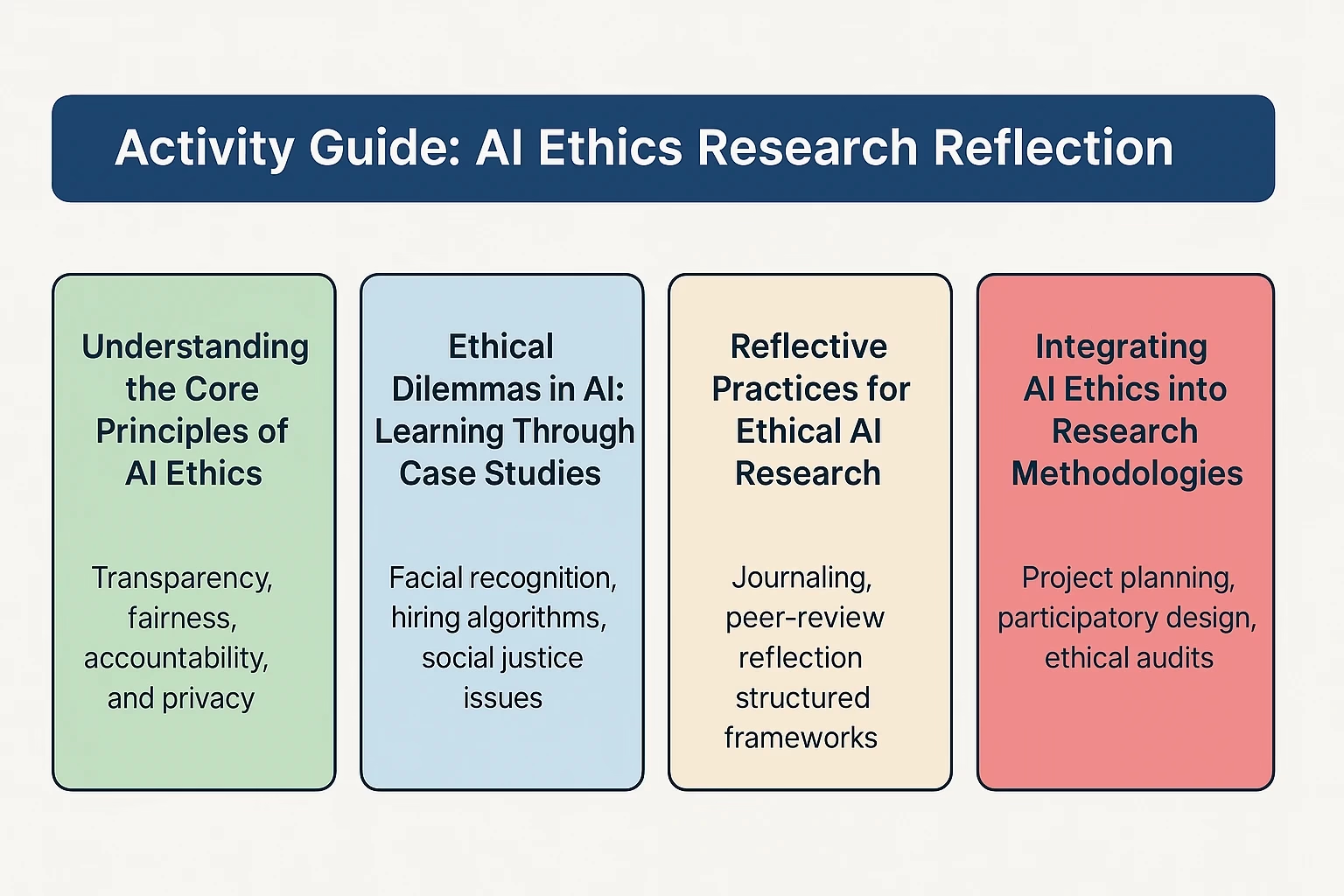

Activity Guide: AI Ethics Research Reflection

Understanding and reflecting on artificial intelligence’s ethical implications is crucial in shaping responsible AI systems. This activity guide on AI ethics research reflection offers a comprehensive path to explore ethical challenges, analyze case studies, and develop a deep understanding of responsible AI development practices.

As AI systems become more integrated into society, the need for ethical frameworks, transparency, and accountability grows stronger. This guide is designed to help students, researchers, and professionals critically examine the moral implications of AI innovations through structured activities and reflective insights.

Through a combination of practical activities, research evaluation, and reflection prompts, this guide works through AI ethics using real-world issues and forward-looking questions.

Understanding the Core Principles of AI Ethics

To meaningfully engage in AI ethics research, one must begin by grasping the fundamental principles guiding ethical AI development. These include transparency, fairness, accountability, privacy, and the avoidance of harm. Each of these values underpins the responsible deployment of AI technologies across industries.

Transparency ensures that AI decision-making processes can be audited and understood. Fairness emphasizes the need to prevent bias in training data or model outputs. Accountability holds developers and organizations responsible for their systems’ actions, while privacy focuses on protecting individuals’ data rights. These pillars are not just theoretical—they frame the foundation for practical decision-making in AI projects.

Ethical Dilemmas in AI: Learning Through Case Studies

Exploring real-world case studies is an essential method for developing a strong ethical understanding. A widely discussed case involves facial recognition technology and its use by law enforcement. While these tools can enhance security, they also raise serious concerns around surveillance, racial profiling, and consent.

Another powerful example is the use of AI in hiring platforms. Algorithms designed to screen candidates have been found to inherit historical biases, disadvantaging certain groups based on gender, ethnicity, or socioeconomic background. These dilemmas reveal the deep intersection between AI and social justice, urging researchers to ask not just what AI can do, but what it should do.
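One way to make the hiring example concrete is to compare how often a screening step advances candidates from different groups. The sketch below is illustrative only: the group names, the counts, and the helper functions `selection_rates` and `disparate_impact_ratio` are assumptions made up for this guide, not data or code from any real hiring platform. It computes each group's selection rate and the ratio of the lowest rate to the highest.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the fraction of candidates advanced per group.

    `records` is an iterable of (group, advanced) pairs, where `advanced`
    is True if the screening step passed the candidate along.
    """
    totals = defaultdict(int)
    advanced = defaultdict(int)
    for group, was_advanced in records:
        totals[group] += 1
        if was_advanced:
            advanced[group] += 1
    return {g: advanced[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody advanced in any group, so there is no gap to measure
    return min(rates.values()) / highest

# Made-up screening outcomes for two hypothetical groups (assumption).
records = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 20 + [("group_b", False)] * 80
)
rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)
print(rates, ratio)
```

In this made-up data, group_b is advanced half as often as group_a. A ratio below 0.8 is often treated as a warning sign (the "four-fifths" rule of thumb used in US employment guidance), though a single number like this is a starting point for discussion rather than proof of unfair treatment.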

Interactive Activity: Ethical Case Evaluation

Select a recent AI deployment (e.g., ChatGPT, autonomous vehicles, AI in healthcare) and analyze its ethical implications using the core principles discussed above. Document concerns, risks, and your personal stance on its responsible use. Present your reflections in a group discussion or journal entry.

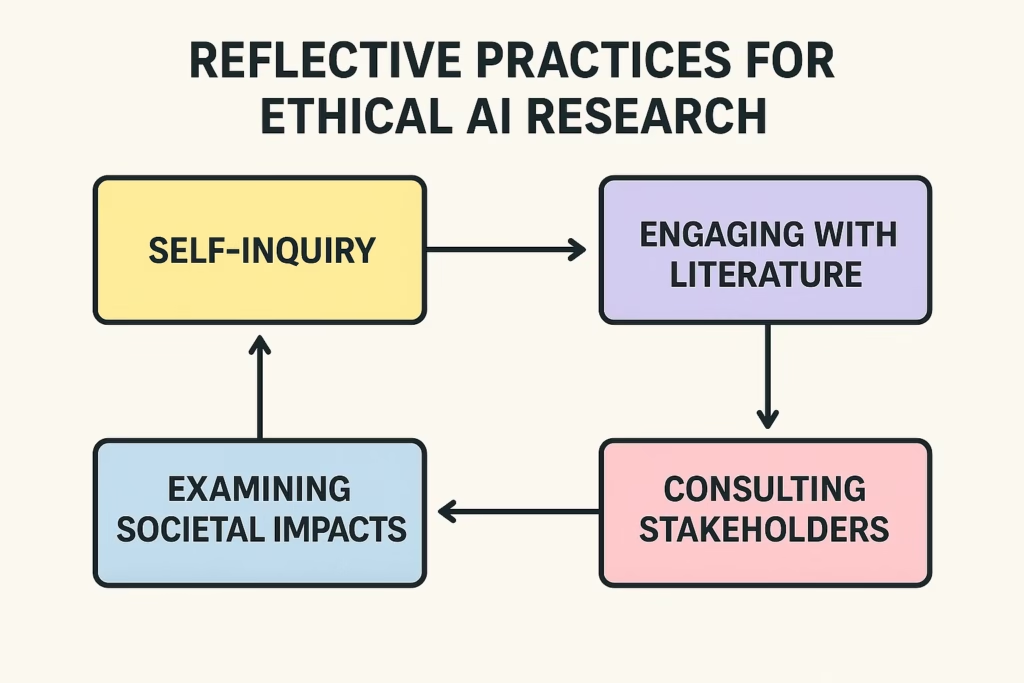

Reflective Practices for Ethical AI Research

Reflection is a crucial part of ethical research, enabling continuous learning and critical self-assessment. One powerful method is journaling after AI development sessions. Reflective journals encourage researchers to record decisions, ethical challenges faced, and emotional responses during their work.

Peer-review reflection is another useful strategy. Collaborators can provide ethical critiques of each other’s research, helping to identify blind spots or biases. These discussions promote accountability and a culture of ethical awareness within AI teams.

Finally, structured frameworks like Gibbs’ Reflective Cycle or the DIEP model (Describe, Interpret, Evaluate, Plan) can guide individuals through consistent and constructive reflections, ensuring ethical reasoning remains central in their research journey.

Reflection Prompt

“Describe a moment in your AI project when you faced an ethical decision. How did you respond, and what would you do differently today based on what you’ve learned?”

Integrating AI Ethics into Research Methodologies

Responsible AI development is not just about responding to ethical issues—it’s about embedding ethics throughout the research lifecycle. Ethical considerations should begin at the project planning stage, where researchers assess the social impact and potential harms of their proposed AI system.

Methods like participatory design—where end-users and stakeholders are involved in system development—can help create more ethical, inclusive AI. Data collection strategies must also be designed to avoid reinforcing systemic biases, ensuring datasets are diverse, representative, and consent-based.
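A simple check on whether a dataset is representative is to compare each group's share of the data with a reference share, such as census figures for the population the system will serve. The sketch below assumes hypothetical age bands, made-up reference shares, and an arbitrary 5-point tolerance; the function names `representation_gaps` and `under_represented` are illustrative, not part of any standard library.

```python
from collections import Counter

def representation_gaps(sample_groups, reference_shares):
    """Compare each group's share in the dataset with a reference share.

    Returns {group: dataset_share - reference_share}; negative values mean
    the group is under-represented relative to the reference.
    """
    counts = Counter(sample_groups)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("sample_groups is empty")
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }

def under_represented(gaps, tolerance=0.05):
    """Groups whose dataset share falls short of the reference by more than `tolerance`."""
    return sorted(g for g, gap in gaps.items() if gap < -tolerance)

# Hypothetical dataset labels and reference population shares (assumptions).
sample = ["18-34"] * 600 + ["35-64"] * 350 + ["65+"] * 50
reference = {"18-34": 0.30, "35-64": 0.50, "65+": 0.20}

gaps = representation_gaps(sample, reference)
flagged = under_represented(gaps)
print(gaps, flagged)
```

Here the two older age bands each fall 15 percentage points short of their reference share and would be flagged. A check like this says nothing about consent or data quality, so it complements the other practices described above rather than replacing them.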

Ethical audits during the testing and deployment phases help verify that the system continues to behave in line with ethical expectations. These audits might assess fairness metrics, algorithmic transparency, and the potential for unintended consequences.
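As one example of a fairness metric an audit might track, the sketch below computes the gap in true positive rate between groups, sometimes called the equal opportunity difference. The labels, predictions, and group assignments are made up, and the helper names `true_positive_rate` and `equal_opportunity_gap` are assumptions for illustration. Toolkits such as AI Fairness 360 offer their own implementations, which are not reproduced here.

```python
def true_positive_rate(y_true, y_pred):
    """Share of actual positives that the model also predicted as positive."""
    positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    if not positives:
        return None  # undefined when the group has no actual positives
    return sum(positives) / len(positives)

def equal_opportunity_gap(y_true, y_pred, groups):
    """Return per-group true positive rates and the largest gap between them."""
    by_group = {}
    for t, p, g in zip(y_true, y_pred, groups):
        labels, preds = by_group.setdefault(g, ([], []))
        labels.append(t)
        preds.append(p)
    tprs = {g: true_positive_rate(ts, ps) for g, (ts, ps) in by_group.items()}
    defined = [v for v in tprs.values() if v is not None]
    gap = max(defined) - min(defined) if defined else 0.0
    return tprs, gap

# Made-up audit sample: 1 = positive outcome, 0 = negative (assumption).
y_true = [1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1, 1, 0, 0, 0, 0, 0]
groups = ["a"] * 6 + ["b"] * 6

tprs, gap = equal_opportunity_gap(y_true, y_pred, groups)
print(tprs, gap)
```

In this sample the model correctly identifies three of four qualifying cases in group a but only one of four in group b. Which metric matters most, and how large a gap is acceptable, depends on the application and should be decided with stakeholders rather than fixed in code.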

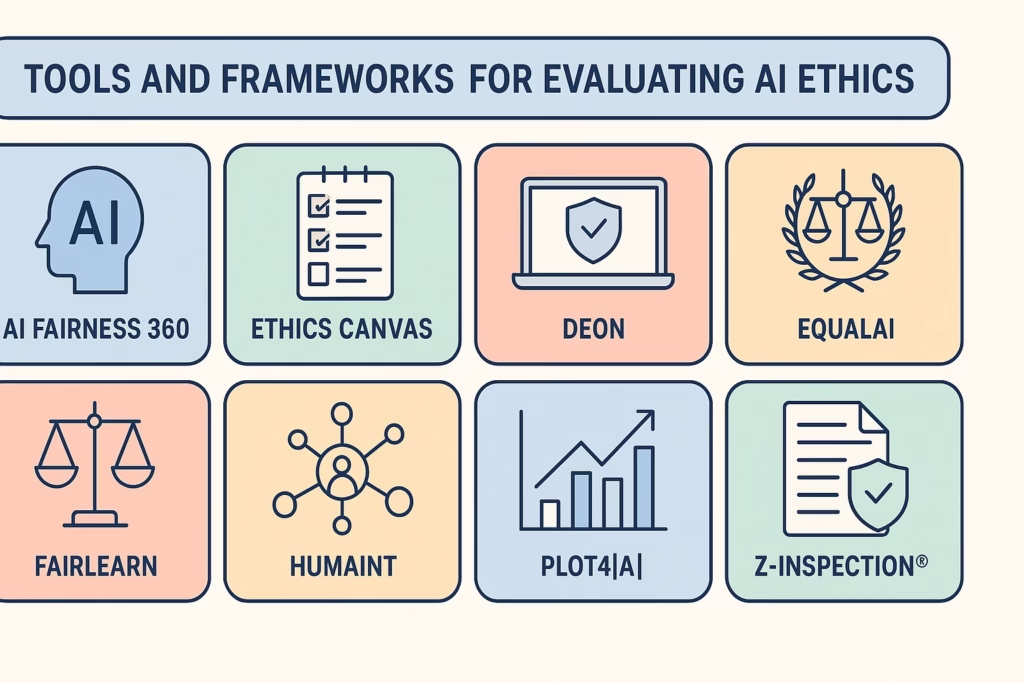

Tools and Frameworks for Evaluating AI Ethics

Several frameworks and tools are available to support AI ethics evaluation. One notable example is the “Ethics Guidelines for Trustworthy AI,” published in 2019 by the European Commission’s High-Level Expert Group on AI, which sets out seven key requirements, including human agency and oversight, technical robustness and safety, and privacy and data governance.

The AI Now Institute also provides a practical checklist for AI developers, focusing on bias evaluation, explainability, and human oversight. Additionally, tools like IBM’s AI Fairness 360 or Google’s What-If Tool offer algorithmic testing environments to analyze model bias and outcomes.

Integrating these tools into your workflow can improve ethical compliance and help build user trust, which in turn supports the long-term sustainability of AI applications.

Building a Culture of Ethics in AI Research Labs

Creating an ethical AI culture requires intentional leadership and everyday practices. Lab leaders can set the tone by prioritizing ethics during team meetings, allocating time for discussion of moral dilemmas, and implementing training programs on responsible AI development.

Cross-disciplinary collaboration is another key enabler. Ethicists, sociologists, and legal experts should be involved in AI research teams to provide holistic perspectives and flag risks that developers may overlook. This fosters a more inclusive research environment where multiple viewpoints are respected and considered.

Reward systems and performance evaluations should also reflect ethical conduct, ensuring that researchers are recognized not just for speed or innovation, but for their commitment to responsible and inclusive design practices.

Group Activity: AI Ethics Charter

Work with your lab or group to create a shared AI Ethics Charter. Define your core ethical values, acceptable research practices, and a code of conduct for AI experimentation. Revisit and revise this charter periodically as technologies evolve.