Computer Vision: An In-Depth Exploration

Computer vision is a dynamic field within artificial intelligence (AI) and computer science that enables machines to interpret and understand visual information from the world. Drawing inspiration from human vision, it allows computers to analyze images and videos to identify objects, recognize patterns, and make informed decisions. From unlocking smartphones with facial recognition to enabling self-driving cars to navigate roads, computer vision is increasingly integral to modern technology.

Foundations of Computer Vision

Human Vision vs. Computer Vision

Human vision involves a complex biological system that includes the eyes, optic nerves, and brain. The retina captures light, converts it to neural signals, and transmits it to the brain for processing. The brain interprets these signals to form a coherent understanding of the environment.

Computer vision, in contrast, relies on digital images and algorithms. It uses mathematical models to process and analyze pixels in images. While human vision is intuitive and evolved, computer vision requires programmed logic and training on data to interpret visuals.

Historical Context

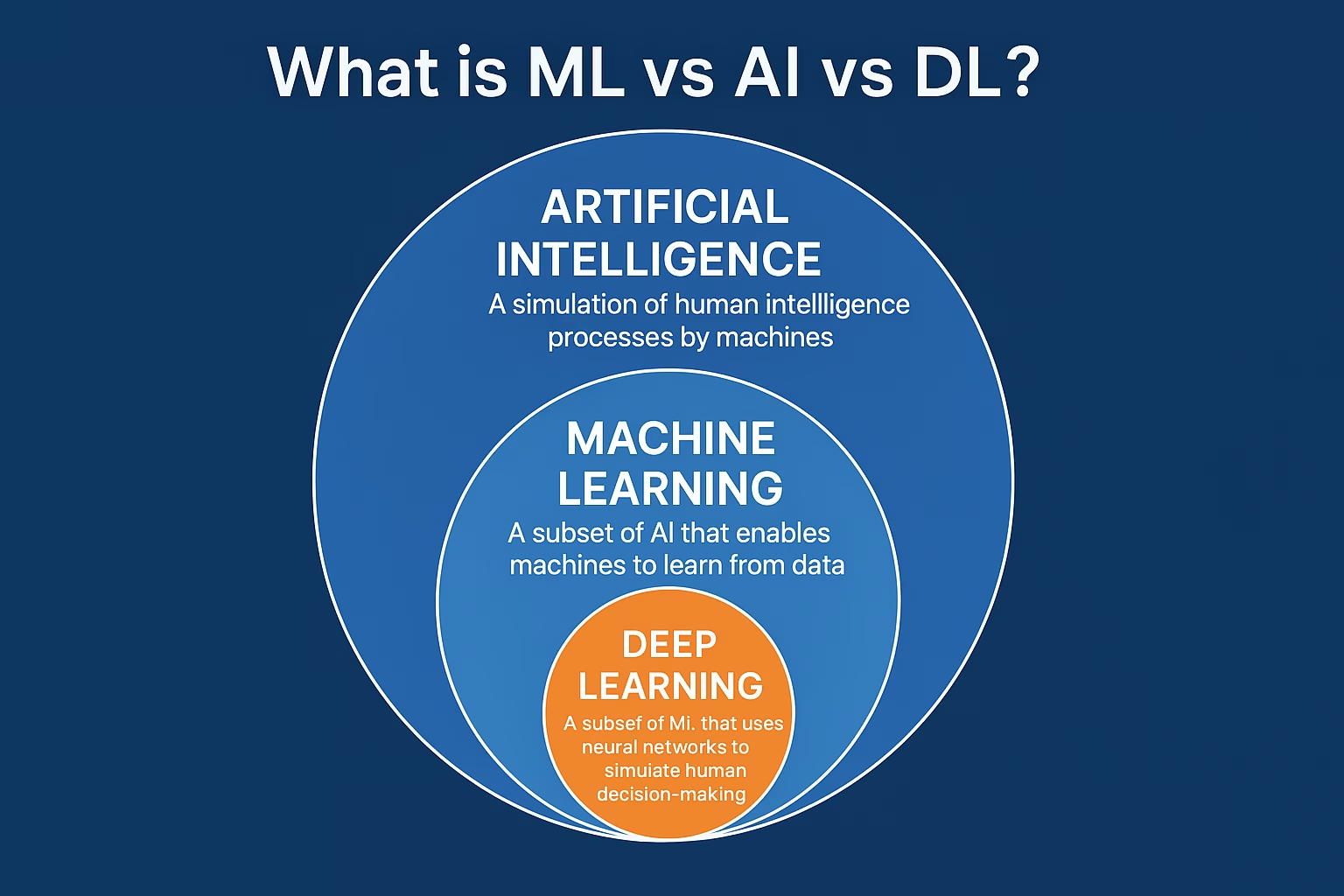

Computer vision began as a subfield of AI in the 1960s. Early projects focused on basic tasks like edge detection and pattern recognition. By the 1980s and 1990s, the field expanded with advancements in image processing and machine learning. The introduction of deep learning in the 2010s revolutionized computer vision, enabling machines to achieve human-level accuracy in many tasks.

Image Representation and Processing

Pixels and Color Models

Digital images are composed of pixels, each representing a color value. The most common color model is RGB (Red, Green, Blue), where each pixel has three components. Other models include HSV (Hue, Saturation, Value) and grayscale images, which represent intensity only.

Image Preprocessing

Before analysis, images are often preprocessed to enhance features and reduce noise. Common preprocessing techniques include:

- Resizing: Adjusting image dimensions to a standard size.

- Normalization: Scaling pixel values to a consistent range.

- Filtering: Using techniques like Gaussian blur or median filters to smooth or sharpen images.

- Thresholding: Converting grayscale images to binary for edge detection or segmentation.

Core Techniques in Computer Vision

Feature Detection and Matching

Feature detection involves identifying key points or regions of interest in an image. Algorithms like SIFT (Scale-Invariant Feature Transform), SURF (Speeded Up Robust Features), and ORB (Oriented FAST and Rotated BRIEF) detect features that are invariant to scale, rotation, and lighting.

Feature matching compares these key points across images, essential for tasks like image stitching, object recognition, and 3D reconstruction.

Image Classification

Image classification assigns a label to an image based on its content. Traditional methods used hand-crafted features and classifiers like Support Vector Machines (SVMs). Deep learning, especially Convolutional Neural Networks (CNNs), has become the dominant approach, achieving remarkable accuracy in tasks like identifying animals, vehicles, or diseases in images.

Object Detection

Object detection goes beyond classification to identify and localize multiple objects within an image. It outputs bounding boxes and labels for each detected object.

Notable object detection models include:

YOLO (You Only Look Once)

- Faster R-CNN (Region-based Convolutional Neural Networks)

- SSD (Single Shot MultiBox Detector)

- Semantic and Instance Segmentation

- Segmentation involves dividing an image into meaningful regions:

- Semantic segmentation: Labels each pixel with a class (e.g., “car” or “road”).

- Instance segmentation: Differentiates between multiple objects of the same class (e.g., two separate cars).

Deep learning models like U-Net, Mask R-CNN, and Deep lab excel in these tasks.

Optical Flow and Motion Analysis

Optical flow estimates the movement of objects between video frames. It is useful in tracking, video stabilization, and action recognition. Techniques like Lucas-Kanade and Farne back methods are commonly used.

Deep Learning in Computer Vision

Deep learning has significantly advanced computer vision by enabling automatic feature learning and improved performance.

Convolutional Neural Networks (CNNs)

CNNs are the backbone of modern computer vision. They consist of layers that automatically detect patterns in images:

- Convolutional layers: Apply filters to extract features.

- Pooling layers: Downsample the image to reduce dimensionality.

- Fully connected layers: Perform classification or regression.

CNN architectures like AlexNet, VGG, ResNet, and EfficientNet have driven progress in image recognition.

Transfer Learning

Transfer learning involves using a pre-trained model and fine-tuning it for a new task. It reduces training time and improves performance, especially with limited data.

Vision Transformers (ViTs)

Transformers, originally developed for natural language processing, are now used in vision tasks. Vision Transformers process images as sequences of patches, offering competitive performance and scalability.

Applications of Computer Vision

Computer vision has diverse applications across industries.

Healthcare

- Medical Imagine: Detecting tumors, fractures, or anomalies in X-rays, MRIs, and CT scans.

- Histopathology: Analyzing biopsy samples for cancer detection.

- Ophthalmology: Diagnosing retinal diseases using fundus images.

Autonomous Vehicles

- Object detection: Identifying pedestrians, vehicles, and traffic signs.

- Lane detection: Recognizing road lanes for navigation.

- Obstacle avoidance: Detecting and responding to hazards in real time.

Retail and E-commerce

- Visual search: Finding products by uploading images.

- Shelf monitoring: Ensuring product availability and correct placement.

- Customer analytics: Analyzing shopper behavior in stores.

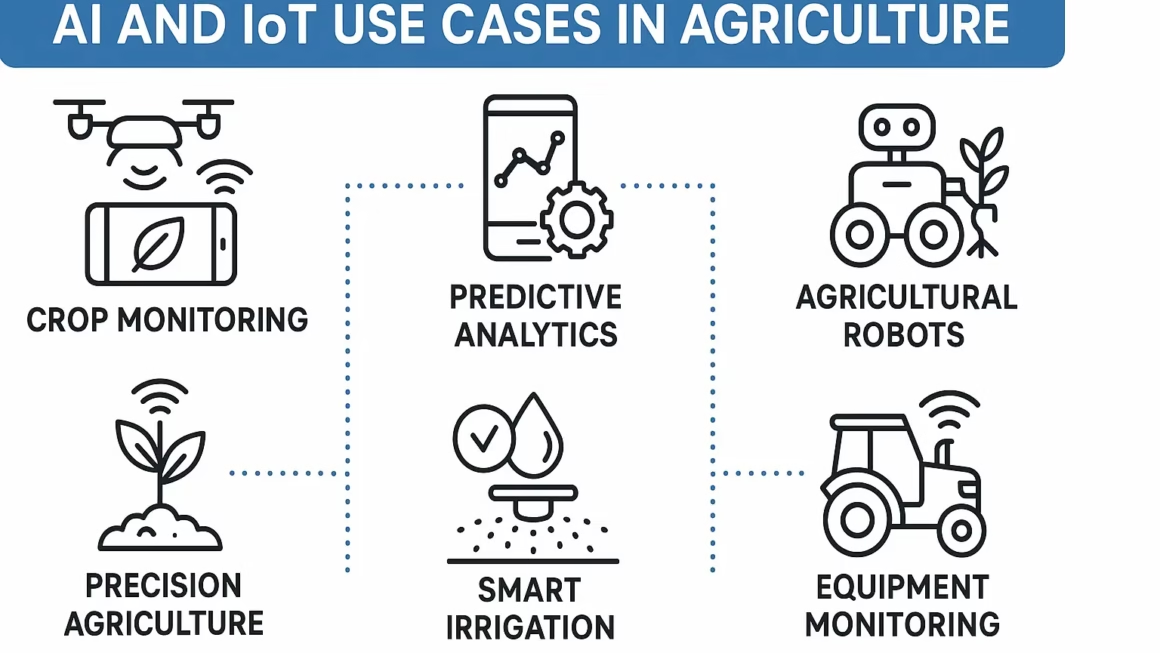

Agriculture

- Crop monitoring: Identifying diseases or nutrient deficiencies.

- Yield estimation: Predicting harvest quantities.

- Weed detection: Automating precision spraying.

Security and Surveillance

- Facial recognition: Identifying individuals for access control or criminal detection.

- Anomaly detection: Identifying suspicious behavior or objects in video feeds.

- Crowd analysis: Monitoring density and movement in public areas.

Manufacturing and Quality Control

- Defect detection: Identifying flaws in products or components.

- Automation: Guiding robotic arms for assembly or inspection.

- Process monitoring: Ensuring compliance with standards.

Sports and Entertainment

- Player tracking: Analyzing player movements and performance.

- Broadcast enhancement: Augmenting live feeds with statistics and visual effects.

- Content moderation: Detecting inappropriate content in videos and images.

Challenges in Computer Vision

Despite progress, several challenges remain:

Variability and Ambiguity

Lighting, occlusion, scale, and perspective can drastically change an image’s appearance, making consistent interpretation difficult.

Data Requirements

Deep learning models require large, diverse datasets for training. Annotating such data is expensive and time-consuming.

Real-Time Performance

Many applications, like autonomous driving or surveillance, demand real-time processing. Optimizing models for speed and efficiency is crucial.

Generalization

Models trained on specific datasets may not perform well in new environments. Ensuring robust generalization remains a key research goal.

Ethical and Privacy Concerns

Facial recognition and surveillance raise significant ethical issues. Ensuring responsible use, data privacy, and fairness is essential.

Tools and Frameworks

Popular tools and frameworks used in computer vision include:

- OpenCV: An open-source library for real-time image processing.

- TensorFlow and PyTorch: Leading deep learning frameworks.

- Detectron2: A PyTorch-based framework for object detection and segmentation.

- KerasCV: High-level tools for building computer vision models.

Future Directions

The future of computer vision is promising, with several exciting trends:

Multimodal Learning

Combining vision with text, audio, or other data types enhances understanding. Examples include image captioning and visual question answering.

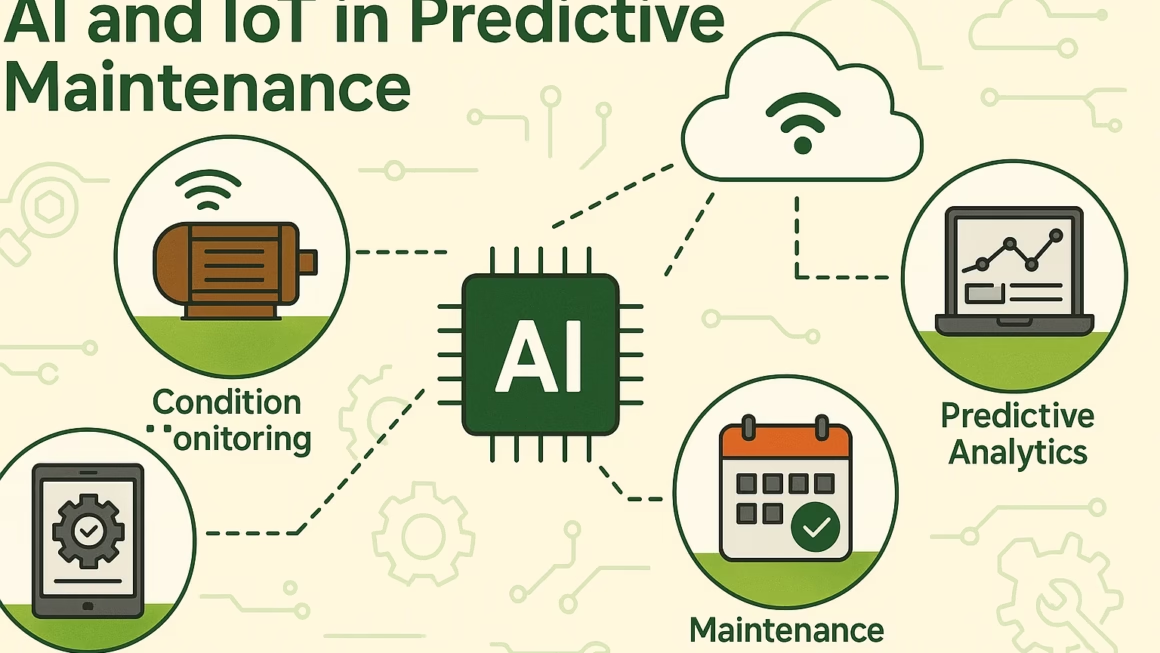

Edge AI

Deploying vision models on edge devices like smartphones or IoT cameras allows for real-time, low-latency processing without cloud dependence.

3D Computer Vision

Understanding depth and 3D structure using stereo vision, LiDAR, or monocular cues enables applications like AR/VR, robotics, and 3D modeling.

Explainability and Fairness

Improving the transparency of AI models and addressing biases is crucial for trustworthy and ethical AI deployment.

Generative Models

Generative models like GANs (Generative Adversarial Networks) and diffusion models enable image synthesis, super-resolution, and image-to-image translation.

Conclusion

Computer vision is a transformative technology that bridges the gap between digital systems and the physical

One thought on “Unlocking Innovation with Computer Vision”