Computer Vision Regression Label

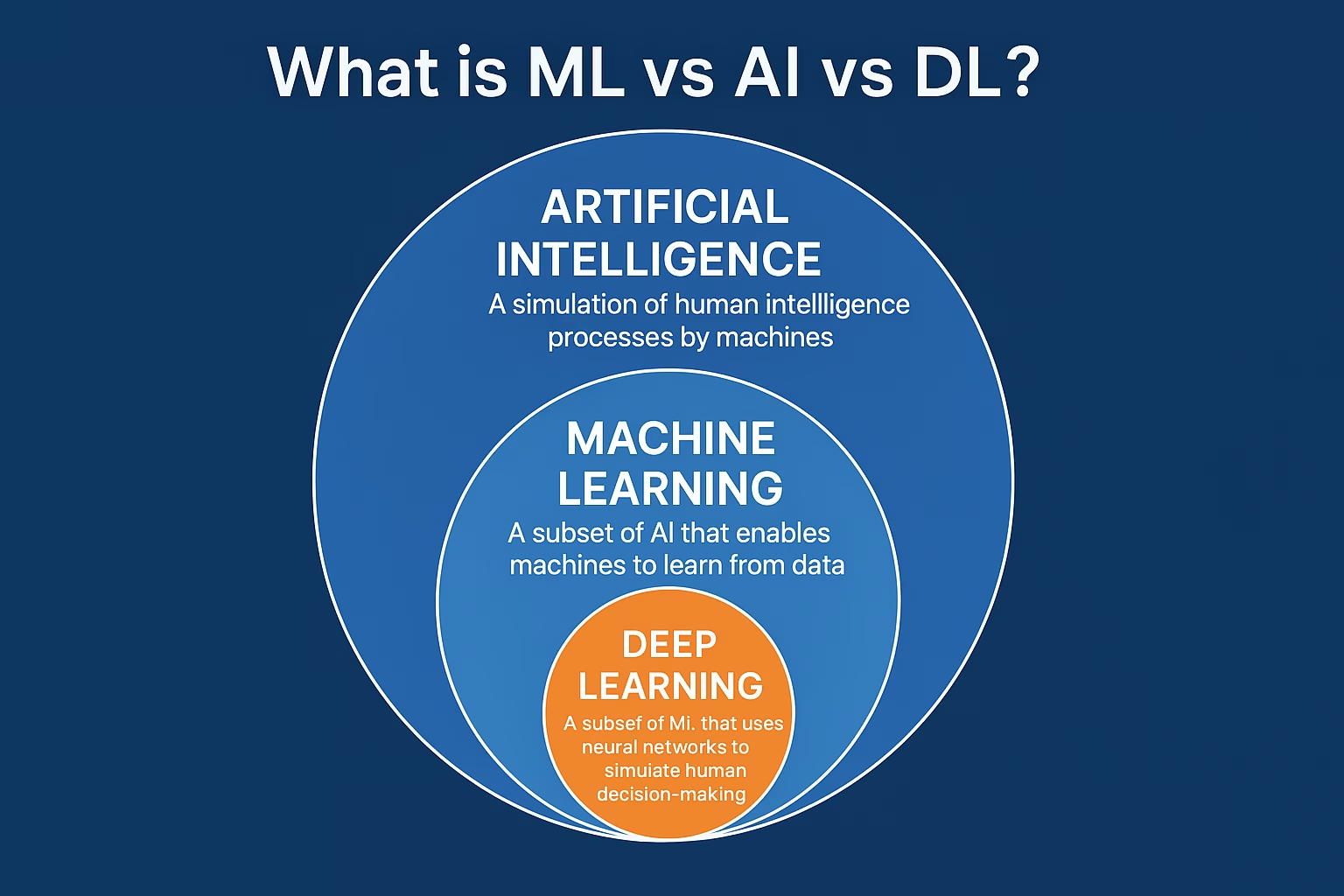

In artificial intelligence, computer vision regression labels are central to tasks that require continuous rather than categorical predictions. Unlike classification labels, regression labels let models learn to predict numerical values, a capability used in industries ranging from autonomous driving to medical imaging.

As demand grows for precise, real-time predictions, understanding how regression labels work in computer vision has become increasingly important. This guide covers foundational concepts, data labeling techniques, model choices, real-world applications, and deployment.

Understanding Computer Vision Regression Labels

What Are Regression Labels in Computer Vision?

In computer vision, regression tasks involve predicting continuous numerical values from image data. A regression label refers to the ground truth numeric value associated with an image input, used for training deep learning models. This could include predicting the age of a person from a photo, estimating the depth of a scene, or determining the coordinates of an object.

How It Differs from Classification Labels

Classification assigns discrete classes such as “cat” or “dog”, whereas regression assigns real values like 5.6 meters or 27 years. The loss functions also differ: Mean Squared Error (MSE) or Mean Absolute Error (MAE) are typical for regression, while cross-entropy is typical for classification. This core distinction significantly influences model design and evaluation metrics.
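To make the two regression losses concrete, here is a minimal sketch in plain Python. The ages and predictions are made-up values used only for illustration, and the function names are hypothetical helpers rather than part of any library.

```python
def mean_squared_error(y_true, y_pred):
    """Average of squared differences; large errors are penalized heavily."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_absolute_error(y_true, y_pred):
    """Average of absolute differences; less sensitive to outliers than MSE."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical ground-truth ages and model predictions for three photos.
ages_true = [27.0, 41.0, 35.0]
ages_pred = [25.0, 45.0, 35.0]

mse = mean_squared_error(ages_true, ages_pred)   # (4 + 16 + 0) / 3
mae = mean_absolute_error(ages_true, ages_pred)  # (2 + 4 + 0) / 3
print(f"MSE={mse:.3f}  MAE={mae:.3f}")
```

Note how the single 4-year error dominates the MSE, while the MAE weights every error in proportion to its size.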

Common Use Cases for Regression Labels

Regression labels are applied in:

- Pose estimation (predicting angles or coordinates)

- Facial analysis (age or emotion intensity prediction)

- Autonomous vehicles (distance to an object or lane curvature)

- Agriculture (crop yield estimation from satellite images)

These applications depend on high-precision continuous output, making regression labels critical.

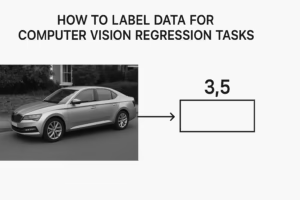

How to Label Data for Computer Vision Regression Tasks

Best Practices for Data Annotation

Unlike classification, where each image is tagged with a category, regression requires precise numeric values. This precision makes high-quality annotation more time-consuming and often calls for domain-specific knowledge. Tools that support coordinate tagging, depth mapping, or pixel-level annotation make this work considerably easier.

Tools for Regression Annotation

Popular tools include:

- Labelbox – Good for bounding box and point regression tasks

- CVAT – Ideal for frame-by-frame annotations in videos

- Supervisely – Offers plugins and AI-assisted labeling

The choice of tool depends on the task (e.g., keypoint prediction vs. object size estimation).

Data Normalization and Scaling

Before feeding data into models, labels must often be normalized. For example, coordinate values might be scaled to the [0, 1] range by dividing by the image width and height. Normalization helps stabilize training and tends to make convergence more consistent in neural networks.
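As a rough illustration of coordinate scaling, the sketch below divides pixel coordinates by the image size and maps them back again. The image size, keypoints, and function names are assumptions chosen for the example.

```python
def normalize_keypoints(keypoints, width, height):
    """Scale pixel (x, y) coordinates to the [0, 1] range."""
    return [(x / width, y / height) for x, y in keypoints]


def denormalize_keypoints(keypoints, width, height):
    """Map normalized coordinates back to pixels for evaluation or display."""
    return [(x * width, y * height) for x, y in keypoints]


# Hypothetical 640x480 image with two annotated keypoints.
pixels = [(320.0, 240.0), (160.0, 120.0)]
normalized = normalize_keypoints(pixels, width=640, height=480)
restored = denormalize_keypoints(normalized, width=640, height=480)
print(normalized)
```

Keeping the inverse transform next to the forward one makes it harder to forget to convert predictions back to pixels before computing metrics in real units.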

Deep Learning Models for Regression in Computer Vision

Architectures That Excel at Regression Tasks

Convolutional Neural Networks (CNNs) remain foundational for regression in computer vision. CNN backbones such as ResNet and EfficientNet, along with Vision Transformers (ViT), are popular because they are deep and transfer well from pretraining. Whereas a classification model typically ends in a softmax layer, a regression model ends in a linear (identity) output layer so that it can produce unbounded continuous values.
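The difference between the two output layers can be sketched without any deep learning framework. The feature vector, weights, and logits below are invented for illustration; in a real model they would come from a trained backbone and head.

```python
import math


def linear_head(features, weights, bias):
    """Regression head: a weighted sum with no squashing activation."""
    return sum(f * w for f, w in zip(features, weights)) + bias


def softmax(logits):
    """Classification activation: turns logits into probabilities."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical pooled features from a backbone such as ResNet.
features = [0.5, 2.0, 1.0]

# Regression: one unbounded real number (for example, distance in meters).
distance = linear_head(features, weights=[2.0, 1.5, 0.0], bias=1.0)

# Classification: probabilities over classes that sum to 1.
class_probs = softmax([2.0, 0.5])
print(distance, class_probs)
```

The regression output can take any real value, while the softmax output is confined to probabilities, which is why the two tasks need different final layers.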

Customizing Loss Functions for Better Accuracy

Choosing the right loss function is vital. Common loss functions for regression include:

- Mean Squared Error (MSE)

- Mean Absolute Error (MAE)

- Huber Loss (robust to outliers)

Advanced techniques also involve dynamic loss weighting when multiple regression tasks are involved.
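To show why Huber loss is described as robust to outliers, here is a small sketch of its standard definition: quadratic for errors up to a threshold `delta`, linear beyond it. The depth values and the choice of `delta=1.0` are assumptions for the example.

```python
def huber_loss(y_true, y_pred, delta=1.0):
    """Mean Huber loss: quadratic for small errors, linear for large ones."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        err = abs(t - p)
        if err <= delta:
            total += 0.5 * err ** 2
        else:
            total += delta * (err - 0.5 * delta)
    return total / len(y_true)


# Hypothetical depth labels in meters; the last prediction is badly off.
depth_true = [2.0, 3.0, 10.0]
depth_pred = [2.5, 3.0, 4.0]

loss = huber_loss(depth_true, depth_pred, delta=1.0)
print(loss)
```

For the 6-meter error, the Huber term grows linearly (5.5) rather than quadratically (36 under squared error), so one bad label pulls on the model far less.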

Handling Imbalanced or Noisy Labels

Noisy regression labels can significantly hinder model performance. Strategies include:

- Outlier removal using statistical thresholds

- Label smoothing

- Ensembling multiple annotations for consistency

Label hygiene matters especially in regression because even small numeric errors in the ground truth feed directly into the loss, whereas a classification label is simply right or wrong.
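Two of these strategies can be sketched with Python's `statistics` module: combining several annotators' values with the median, and flagging outliers with a modified z-score based on the median absolute deviation. The 3.5 threshold and the 0.6745 constant are a common convention rather than something this article prescribes, and the sample values are invented.

```python
import statistics


def consensus_label(annotations):
    """Combine several annotators' values; the median resists one bad entry."""
    return statistics.median(annotations)


def flag_outliers(values, threshold=3.5):
    """Flag values whose modified z-score (median/MAD based) is too large."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        return [False] * len(values)  # no spread, nothing to flag
    return [abs(0.6745 * (v - med) / mad) > threshold for v in values]


# Hypothetical bone-age estimates (years) from three annotators.
label = consensus_label([12.0, 12.5, 19.0])

# Hypothetical distance labels (meters); 50.0 looks like a data-entry error.
flags = flag_outliers([5.0, 5.2, 4.9, 5.1, 50.0])
print(label, flags)
```

Flagged labels are usually better sent back for review than silently dropped, since an extreme value can also be a genuine rare case.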

Applications of Computer Vision Regression Labels in the Real World

Medical Imaging and Diagnostics

In healthcare, regression is used to estimate tumor volume, bone age, or organ sizes from CT or MRI scans. These quantitative metrics can support diagnostics, treatment planning, and monitoring disease progression with greater accuracy than qualitative assessments.

Autonomous Driving and Robotics

Regression labels are indispensable for self-driving cars. Tasks include:

- Estimating the distance to pedestrians or other vehicles

- Predicting steering angles

- Determining road curvature and incline

These applications require real-time performance with high accuracy to ensure safety and navigation efficiency.

Retail, Fashion, and Real Estate

Regression helps in:

- Estimating clothing size or fit from images

- Predicting shelf stock levels in retail outlets

- Valuing property based on exterior and interior imagery

These applications benefit from using historical data and visual cues to create accurate value predictions.

Challenges and Future of Computer Vision Regression Labeling

Data Scarcity and Annotation Costs

Since regression labels often require expert input or specialized equipment (e.g., LiDAR or medical scanners), acquiring them is usually more expensive than acquiring classification labels. Synthetic data generation and self-supervised learning are emerging ways to reduce dependence on manually annotated datasets.

Model Interpretability in Regression Tasks

Understanding why a model predicts a certain value is key, especially in regulated industries. Tools like SHAP (SHapley Additive exPlanations) and Grad-CAM (Gradient-weighted Class Activation Mapping) are being adapted for regression models to provide transparency and build trust.

Multi-Task Learning and Hybrid Models

Modern AI systems often combine regression with classification tasks. For example, a model may predict both the age and gender of a person from a single image. Because the tasks share a representation, this approach can improve performance and may reduce overfitting, although the benefit depends on how closely related the tasks are.
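A common way to train such a model is to add a regression loss and a classification loss into one weighted objective. The sketch below assumes squared error for the numeric output and cross-entropy for the class output; the weights and sample values are hypothetical and would normally be tuned.

```python
import math


def multitask_loss(value_true, value_pred, class_true, class_probs,
                   reg_weight=1.0, cls_weight=1.0):
    """Weighted sum of squared error and cross-entropy for one sample."""
    regression = (value_true - value_pred) ** 2
    classification = -math.log(class_probs[class_true])
    return reg_weight * regression + cls_weight * classification


# Hypothetical sample: true age 30, predicted 28; true class index 1,
# predicted class probabilities [0.2, 0.8].
loss = multitask_loss(30.0, 28.0, class_true=1, class_probs=[0.2, 0.8],
                      reg_weight=0.1, cls_weight=1.0)
print(loss)
```

The regression weight is set below 1 here because squared errors in years are numerically much larger than a cross-entropy term; without some rebalancing, one task can dominate the gradient.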

Optimizing Models for Deployment

Lightweight Models for Edge Devices

Deploying regression models on mobile or embedded systems requires optimization techniques like quantization, pruning, and knowledge distillation. These aim to keep accuracy close to that of the original model while fitting within memory and computation constraints, so accuracy should be re-measured after each optimization step.
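The idea behind quantization can be illustrated with a simplified symmetric int8 scheme: store weights as small integers plus one floating-point scale. This is a toy sketch under that assumption, not how any particular framework implements it, and the weights are invented.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


# Hypothetical layer weights.
weights = [0.5, -1.27, 0.01, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, max_error)
```

The rounding error per weight is at most half the scale, which is why quantization usually costs a little accuracy rather than none. For a regression model, that cost shows up directly as a shift in the predicted values.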

Real-Time Inference and Latency

In scenarios like robotics or autonomous navigation, inference time is critical. Techniques such as ONNX conversion, TensorRT optimization, and GPU acceleration are employed to reduce latency while keeping any loss in model accuracy small.
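Whichever optimization is used, it helps to measure latency before and after. The following sketch times repeated calls to a prediction function with `time.perf_counter`; `dummy_predict` is a hypothetical stand-in for a real model call, and the warm-up and run counts are arbitrary.

```python
import statistics
import time


def measure_latency_ms(predict, sample, warmup=5, runs=50):
    """Return (median, worst) latency of predict(sample) in milliseconds."""
    for _ in range(warmup):  # warm-up calls are not timed
        predict(sample)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        predict(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings), max(timings)


def dummy_predict(pixels):
    """Hypothetical stand-in for a real model's inference call."""
    return sum(pixels) / len(pixels)


median_ms, worst_ms = measure_latency_ms(dummy_predict, [0.5] * 10_000)
print(f"median={median_ms:.3f} ms  worst={worst_ms:.3f} ms")
```

Reporting the worst case alongside the median matters for real-time systems, where an occasional slow frame can be more harmful than a slightly higher average.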

Monitoring Models in Production

Once deployed, regression models must be monitored for:

- Data drift (change in input distributions) and prediction drift (change in the distribution of model outputs)

- Performance degradation over time

- Label feedback loops from user interactions

Active learning loops can be implemented to retrain models with real-world data.
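One simple way to watch for a shift in a model's predictions is to compare the mean of recent outputs against a reference window, measured in reference standard deviations. This is a deliberately basic sketch; the threshold of 2.0 and the sample values are assumptions, and production systems often use fuller distribution tests.

```python
import statistics


def mean_shift_score(reference, live):
    """Shift of the live mean from the reference mean, in reference std units."""
    ref_mean = statistics.fmean(reference)
    ref_std = statistics.stdev(reference)
    return abs(statistics.fmean(live) - ref_mean) / ref_std


# Hypothetical predicted distances (meters) at validation time and in production.
reference_preds = [10.0, 12.0, 11.0, 9.0, 13.0]
live_preds = [15.0, 16.0, 14.0, 17.0, 15.0]

score = mean_shift_score(reference_preds, live_preds)
drift_detected = score > 2.0  # hypothetical alert threshold
print(f"shift={score:.2f}  drift={drift_detected}")
```

A flagged shift does not by itself mean the model is wrong, but it is a reasonable trigger for collecting fresh labels and checking accuracy on recent data.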

Frequently Asked Questions (FAQs)

1. What is a regression label in computer vision?

A regression label in computer vision refers to a continuous numerical value assigned to an image, used for training models that predict quantities rather than categories.

2. How is regression different from classification in AI?

Regression predicts numeric values (like age or distance), while classification predicts discrete labels (like cat or dog). Different loss functions and evaluation metrics are used for each.

3. What models are best suited for computer vision regression?

Models like ResNet, EfficientNet, and Vision Transformers are commonly used. The final layers are typically adapted with linear activations for continuous outputs.

4. How do you annotate data for a regression task?

Annotation involves assigning accurate numerical values to each image. Specialized tools like Labelbox or CVAT are used, and normalization of values is often applied before training.

5. Can a model perform both classification and regression?

Yes, with multi-task learning. A single model can be trained to output both a classification label and a regression value, improving overall learning efficiency.